What Is XAI? Everything You Need to Know About Explainable AI

What is XAI? What is XAI for? How is Explainable AI different from regular AI? People are starting to ask these questions while many are still getting used to AI-generated videos and deepfakes on their For You pages. AI is progressing faster than most of us expected, and businesses that do not keep up with the latest advancements risk being forgotten, just like printed newspapers. Below, we answer the most frequently asked questions about Explainable AI, walk beginners through a step-by-step guide to using XAI, and explain what XAI is actually for. Get ready for your most explainable experience with Explainable AI.

In short, today we will take a look at the following topics:

- What is XAI, and how is it different from regular AI? Unlike the generative or conversational AI we already know, Explainable AI does not just process data and generate an output; it also explains how the model arrived at a specific result. But what is XAI for? From explaining predictive ML models in healthcare, law, and education to building trust in AI among users worldwide, XAI offers lasting value for both people and the AI systems they rely on.

- What are some examples of Explainable AI? SHAP and LIME are the cornerstones of XAI in 2026. SHapley Additive exPlanations (SHAP) uses game theory to calculate each feature's contribution at both the local (single prediction) and global (whole model) level, while Local Interpretable Model-agnostic Explanations (LIME) explains individual predictions by testing how small changes to the input data affect them.

- How to use XAI in DataRobot?

- Step 1: Upload the data you want to investigate.

- Step 2: Choose the target feature you would like to make predictions for.

- Step 3: Select the partitioning method for splitting the data the ML model is trained on.

- Step 4: Begin the ML model training and wait for the calculations.

- Step 5: Choose the model to work with among the seven offered.

- Step 6: Compute Feature Impact and get the API code snippet.

- Step 7: Click "Make New Predictions" and get the CSV file with the results.

If you are ready to dive deeper into the power of Explainable AI in 2026, read our all-in-one article below!

What Is XAI?

XAI, or Explainable Artificial Intelligence, is an emerging set of methods that enable AI systems to explain how they arrived at specific results, conclusions, or calculations. Its goal is to help people understand exactly how AI algorithms reach a given conclusion.

Demand for Explainable AI has grown as more users struggle to understand how artificial intelligence generates specific information or performs certain operations. XAI addresses this by explaining how algorithms reach particular insights and which factors affected the result.

In a nutshell, Explainable AI refers to a set of AI technologies and techniques that make decision-making, research, and argumentation more transparent, understandable, and trustworthy, above all for users.

In contrast, classic black-box systems are designed to deliver straightforward answers without exposing the details of their reasoning, whether to the person making the request or within the system itself. This makes unreasonable, questionable, or even fabricated results harder to spot. Now that we are all familiar with the term AI hallucinations, XAI is a big step toward catching doubtful operations and answers.

Note that Explainable AI is still not widely used in the generative AI tools many of us now use every day. Even though ChatGPT and Gemini are actively integrating explainability features to make their answers more authoritative and reduce hallucinations or doubtful research, full transparency has yet to be achieved in mainstream AI chatbots. But what is XAI for, if not for justifying ChatGPT's answers to your late-night questions about a strange knee ache? We answer that question just below.

In simplest terms, XAI answers the question "Why did AI do that?"

How Does XAI Work?

Now that we have scratched the surface of "what is XAI?", it is time to look at how Explainable AI actually works. Below, we break it down into several key strategies and explain each one simply.

"Black Box" Interpreting

Essentially, many regular AI models, particularly deep learning ones, operate as black boxes: they produce results without revealing the underlying processes or methods used to arrive at them. Explainable AI serves to "open" that box and reveal the decision-making process through techniques such as:

- Highlighting the key factors influencing the final decision.

- Tracing the reasoning path taken by the AI.

- Demonstrating how the input changes have affected the output.

We usually encounter this type of Explainable AI in premium versions of conversational AI such as ChatGPT or Gemini.
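The third technique, showing how input changes affect the output, can be sketched without any library. This is a minimal, hypothetical example: `risk_model` is a made-up scoring function standing in for a black box, and we nudge one feature at a time to see how the score moves. It is a simple one-at-a-time sensitivity check, not a description of how ChatGPT or Gemini work internally.

```python
import math

def risk_model(x: dict) -> float:
    """Hypothetical black-box model: returns a probability-like risk score."""
    z = 0.04 * x["age"] + 0.02 * x["blood_pressure"] - 0.5 * x["exercise_hours"] - 4.0
    return 1.0 / (1.0 + math.exp(-z))  # logistic squashing into (0, 1)

def sensitivity(model, x: dict, deltas: dict) -> dict:
    """Change one feature at a time by deltas[name] and record the change in output."""
    base = model(x)
    effects = {}
    for name, delta in deltas.items():
        perturbed = dict(x)          # copy so the original input is untouched
        perturbed[name] += delta
        effects[name] = model(perturbed) - base
    return effects

patient = {"age": 50, "blood_pressure": 130, "exercise_hours": 2}
effects = sensitivity(risk_model, patient, {"age": 10, "blood_pressure": 10, "exercise_hours": 1})
# List features from the largest to the smallest effect on the score
for name, effect in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {effect:+.3f}")
```

Real XAI tools are more careful (for example, about feature scales and interactions), but the principle of perturbing inputs and watching the output is the same.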

Decision Trees

When it comes to "What is XAI?", Decision Trees are one of the most commonly used and easiest-to-understand approaches. A Decision Tree is a model that splits data through a sequence of simple if/then rules, dividing it into separate sections and subsections. Because the tree can be drawn and read step by step, it makes the process of AI decision-making easy to follow.

Decision Trees are already widely used in fundamental areas of our lives, like education, law, healthcare, and even gaming, but Explainable AI takes their use to a new level. XAI methods enhance Decision Trees' interpretability, while Decision Trees help unlock the full potential of Explainable AI and bring AI innovation into almost any field of investigation. Here is how this symbiosis works in practice:

- Feature Importance (SHAP, LIME): These XAI methods assess the importance of each feature in a Decision Tree's prediction by quantifying its impact on the final decision. For instance, XAI can identify how specific metrics, such as age, blood pressure, sex, or cholesterol level, influence the likelihood of a patient's diagnosis.
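Because every prediction of a decision tree is a chain of if/then tests, its reasoning path can be printed directly. The tree below is a hand-written, hypothetical example: the features `glucose` and `bmi` and their thresholds are invented for illustration, not learned from real data. In practice, a library such as scikit-learn would learn the splits.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    feature: Optional[str] = None   # feature tested at this node (None for a leaf)
    threshold: float = 0.0          # go left if value <= threshold, else right
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None     # prediction stored at a leaf

def predict_with_path(node: Node, x: dict):
    """Walk from the root to a leaf, recording each test that was applied."""
    path = []
    while node.label is None:
        value = x[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path

# Hypothetical tree with made-up thresholds
tree = Node("glucose", 125,
            left=Node(label="low risk"),
            right=Node("bmi", 30,
                       left=Node(label="medium risk"),
                       right=Node(label="high risk")))

label, path = predict_with_path(tree, {"glucose": 140, "bmi": 33})
print(label)
for step in path:
    print("  because", step)
```

The printed path is exactly the "reasoning" of the model, which is why decision trees are considered inherently interpretable.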

- Partial Dependence Plots (PDPs): These plots illustrate the relationship between a single feature and the model’s predictions, enabling us to understand how a feature’s change (such as an increase in "age") affects the model's output (for example, the likelihood of diabetes).

- Global and Local Interpretability: Explainable AI lets us understand not only the overall structure of the Decision Tree (globally) but also individual decisions (locally). For example, if a patient received a diagnosis, LIME can show how the model weighed each feature for that individual (e.g., "Rapid weight gain, sleep deprivation, and high sugar levels led to the diabetes diagnosis").
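A partial dependence curve for one feature can be computed by fixing that feature to each grid value for every row in the dataset and averaging the model's predictions. The sketch below uses a hypothetical `diabetes_model` and a tiny made-up dataset; scikit-learn's `sklearn.inspection.partial_dependence` performs the same kind of averaging for real estimators.

```python
import math

def diabetes_model(row: dict) -> float:
    """Hypothetical model returning a probability of diabetes."""
    z = 0.05 * (row["age"] - 50) + 0.08 * (row["bmi"] - 27) + 0.03 * (row["glucose"] - 110)
    return 1.0 / (1.0 + math.exp(-z))

def partial_dependence(model, rows, feature, grid):
    """For each grid value, set `feature` to it in every row and average the predictions."""
    curve = []
    for value in grid:
        preds = [model({**row, feature: value}) for row in rows]
        curve.append(sum(preds) / len(preds))
    return curve

# Tiny made-up dataset
rows = [
    {"age": 35, "bmi": 24, "glucose": 95},
    {"age": 52, "bmi": 31, "glucose": 130},
    {"age": 61, "bmi": 28, "glucose": 115},
]
ages = [30, 40, 50, 60, 70]
for age, p in zip(ages, partial_dependence(diabetes_model, rows, "age", ages)):
    print(f"age={age}: average predicted risk {p:.2f}")
```

Plotting these averages against `ages` gives the PDP: here the curve rises with age because the made-up model's age coefficient is positive.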

Wondering what SHAP and LIME are? Read below to learn more about those model-agnostic XAI techniques.

Post-Hoc (After-the-Fact) Explainability

For more complex models like deep neural networks and ensemble methods such as random forests, XAI uses post-hoc techniques. These methods explain a model's decision-making after its predictions have been made. Key post-hoc techniques include:

- LIME (Local Interpretable Model-Agnostic Explanations)

LIME is generally used to explain individual predictions of any black-box model by approximating it with a simpler, interpretable model (like a linear regression or decision tree) in the local vicinity of the prediction. But how does that work? LIME perturbs the input data (slightly changes its features) and observes how these changes affect the black-box model's output. It then trains a simple, interpretable model on this new data to explain how the complex model arrived at its decision.

As an example, take a machine learning model trained to predict whether a movie review is positive or negative. Here is how LIME would approach it. LIME takes the original review and perturbs it by making small changes, such as removing individual words. It then feeds these perturbed reviews to the original model (without retraining it), fits a simple model to the results, and uses that simple model to show how strongly words like "amazing", "awful", "exciting", "boring", "thrilling", and "dull" influenced the prediction.
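To make the movie-review example concrete, here is a heavily simplified, LIME-style sketch in plain Python. Assumptions: `black_box_sentiment` is a made-up stand-in for the real classifier, perturbations only remove words, the proximity kernel is a simple exponential of the fraction of words removed, and the surrogate is a weighted linear model fitted by least squares with a tiny ridge penalty. The real `lime` package does more (different distance and kernel choices, feature selection), so treat this as an illustration of the idea only.

```python
import math
import random

POSITIVE = {"amazing": 2.0, "exciting": 1.5, "thrilling": 1.5}
NEGATIVE = {"awful": 2.0, "boring": 1.5, "dull": 1.0}

def black_box_sentiment(words):
    """Hypothetical black box: probability that a review is positive."""
    score = sum(POSITIVE.get(w, 0.0) for w in words) - sum(NEGATIVE.get(w, 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-score))

def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def explain(review, n_samples=500, kernel_width=0.5, ridge=1e-3, seed=0):
    words = review.split()
    d = len(words)
    rng = random.Random(seed)
    X, y, w = [], [], []
    for _ in range(n_samples):
        mask = [rng.random() < 0.5 for _ in range(d)]      # True = keep word, False = remove it
        kept = [wd for wd, keep in zip(words, mask) if keep]
        distance = 1.0 - sum(mask) / d                     # fraction of words removed
        X.append([1.0] + [float(m) for m in mask])         # leading 1.0 is the intercept
        y.append(black_box_sentiment(kept))                # query the black box, never retrain it
        w.append(math.exp(-distance ** 2 / kernel_width ** 2))  # closer samples count more
    # Weighted ridge regression: (X^T W X + ridge*I) beta = X^T W y
    k = d + 1
    xtwx = [[sum(w[s] * X[s][i] * X[s][j] for s in range(n_samples)) + (ridge if i == j else 0.0)
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w[s] * X[s][i] * y[s] for s in range(n_samples)) for i in range(k)]
    beta = solve(xtwx, xtwy)
    return dict(zip(words, beta[1:]))   # one weight per word; intercept dropped

weights = explain("an amazing but slightly boring movie")
for word, weight in sorted(weights.items(), key=lambda kv: -abs(kv[1])):
    print(f"{word:>10}: {weight:+.3f}")
```

"amazing" should receive a clearly positive weight, "boring" a negative one, and neutral words weights close to zero, which is the kind of per-word explanation LIME reports.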

- SHAP (SHapley Additive exPlanations)

In contrast, SHAP is a more mathematically rigorous approach based on Shapley values from cooperative game theory. It assigns each feature a contribution value for a given prediction, making it easier to understand each feature's impact. Simply put, SHAP calculates a feature's Shapley value by comparing the model's output with and without that feature across every possible combination of the other features and averaging how much the feature changes the result. The contributions are additive: together with a baseline value, they sum to the model's actual output. Returning to our movie review example, while LIME fits a local approximation from perturbed inputs, SHAP tells you and the ML model how much each word pushed the prediction toward positive or negative. For instance, "exciting" and "thrilling" might each raise the positive score, while "boring" lowers it.

Now that we have covered the basics of "What is XAI?", let's find out what XAI is for.
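To show what "every possible combination of features" means, here is a small exact Shapley computation in plain Python. Assumptions: `model` is a made-up review-scoring function with an interaction term, and a feature that is "absent" from a coalition is replaced by a baseline value (a common simplification; the `shap` library offers several ways to define absence). Exact enumeration grows exponentially with the number of features, which is why SHAP provides faster approximations for real models.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x: dict, baseline: dict) -> dict:
    """Exact Shapley values: weighted average marginal contribution over all coalitions."""
    features = list(x)
    n = len(features)

    def value(coalition):
        # Features in the coalition take their real values; the rest use the baseline.
        point = {f: (x[f] if f in coalition else baseline[f]) for f in features}
        return model(point)

    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi

# Hypothetical review-scoring model with an interaction term
def model(p):
    return 0.3 * p["exciting"] + 0.2 * p["thrilling"] - 0.4 * p["boring"] + 0.1 * p["exciting"] * p["thrilling"]

review = {"exciting": 1, "thrilling": 1, "boring": 1}    # 1 = word present
baseline = {"exciting": 0, "thrilling": 0, "boring": 0}  # 0 = word absent
phi = shapley_values(model, review, baseline)
print(phi)
```

The interaction bonus of 0.1 is split equally between "exciting" and "thrilling", and the three values add up to `model(review) - model(baseline)`, which is the additivity property SHAP is named for.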

What Is XAI for?

While answering "What is XAI?" earlier, we described its core function: explaining how AI arrived at a specific conclusion. Now let's explore what XAI is for and how it can help people.

Building Trust & Transparency

First and foremost, Explainable AI helps users trust AI, because they receive a detailed and precise explanation of how the system arrived at a given output. XAI is particularly valuable in industries that require high levels of precision and confidence, such as healthcare, law, education, and finance, where it makes adopting AI tools smoother and more trustworthy.

Reducing the Human Factor

Staying unbiased as a lawyer, doctor, teacher, or finance specialist is genuinely hard, and XAI can make a real difference in decision-making. Explainable models let people transparently see why specific inputs led to certain decisions and what consequences those decisions might have. This way, XAI helps reduce the influence of human bias on vital decisions in courts, hospitals, or banks while providing detailed justification.

Error Detection and Debugging

Another major use of Explainable AI is testing ML models and detecting bugs so they can be fixed. Advanced XAI tools help teams thoroughly test not only machine learning models but also MVPs, apps, platforms, and other systems, simulating real-life user experience and reporting potential pain points in detail.

Regulatory Compliance

Returning to the topic of using AI in complex areas of our lives, we must mention how Explainable AI supports regulatory compliance and legal requirements. XAI plays a key role in helping organizations meet these standards by making decisions explainable and documentable.
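One practical way to make decisions "documentable" is to store, with each automated decision, the features that contributed most to it (often called reason codes). The sketch below is hypothetical: the contribution values are assumed to come from an explainer such as SHAP, and the record fields (`decision_id`, `reasons`, `logged_at`) are invented for illustration rather than taken from any regulation.

```python
import json
from datetime import datetime, timezone

def audit_record(decision_id: str, decision: str, contributions: dict, top_k: int = 3) -> str:
    """Build a JSON audit entry listing the top_k features by absolute contribution."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = [{"feature": name, "contribution": round(value, 4)} for name, value in ranked[:top_k]]
    record = {
        "decision_id": decision_id,
        "decision": decision,
        "reasons": reasons,
        "logged_at": datetime.now(timezone.utc).isoformat(),  # timestamp for the audit trail
    }
    return json.dumps(record)

# Hypothetical per-feature contributions for one credit application
contributions = {"debt_to_income": -0.42, "late_payments": -0.31, "income": 0.12, "account_age": 0.05}
print(audit_record("app-001", "denied", contributions))
```

Which fields a real audit log needs depends on the applicable rules, so this only shows the general pattern of pairing each decision with its explanation.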

While many of us are still asking what Explainable AI is and what it is for, XAI is actively spreading across even the most unexpected fields of our work, health, education, and entertainment. Here are a few more areas where XAI is used:

- Healthcare Diagnostics;

- Hiring & Recruitment;

- Autonomous Vehicles;

- Financial Services & Credit Scoring;

- Educational Tools;

- AI-Driven Recommendations;

- Supply Chain Management.

What Are the Examples of XAI?

To better understand what XAI is and how it contributes to global AI adoption, let's look at the top five real-life examples of Explainable AI:

- IBM Watson: an XAI example used to analyze medical documentation, patient histories (anamnesis), and personal data to predict potential treatment options. It has been widely used in oncology diagnostics and treatment.

- Zest AI: an example of explainable AI in finance, helping lenders gain transparency into credit decisions, detect fraud, and automate underwriting tasks.

- Waymo: Alphabet's autonomous vehicles (the company began as Google's self-driving car project), driven by XAI or, as the company describes itself, "the world's most experienced driver." Advanced decision-making systems make Waymo one of the safest ride options on the roads of Los Angeles, San Francisco, and Atlanta.

- HireVue: the future of hiring. If you think AI will never take your job, consider that it is already taking over parts of your recruiter's role. Explainable AI significantly shortens the candidate search by automating most recruitment processes and reducing the influence of the human factor.

- Progressive Insurance: already uses XAI to automate approving or denying insurance claims. Instead of waiting hours for a tired customer service representative, you can now discuss your claim with an AI assistant on the phone.

As you can see, while many of us still wonder what Explainable AI is, global companies are making XAI an integral part of our routine.

But what if you also want to stay ahead of the curve? Then it is the right time to learn how to use Explainable AI with our step-by-step guide for beginners below.

How to Use XAI? A Step-by-Step Guide for Beginners

For this guide, we took a sample dataset of flight delays and trained machine learning models on the DataRobot platform, using its XAI features, to show beginners how Explainable AI works with extensive data.

In our case, the dataset includes features like:

- Flight number;

- Scheduled departure time;

- Scheduled departure time (hour of day);

- Date;

- Origin airport;

- Tail number;

- Carrier code;

- Take-off delay (minutes);

- Date (day of week).

If we are trying to predict something (e.g., the destination airport, or whether a flight will be delayed), we want to know which factors the model uses to make that prediction. That is where XAI steps in.

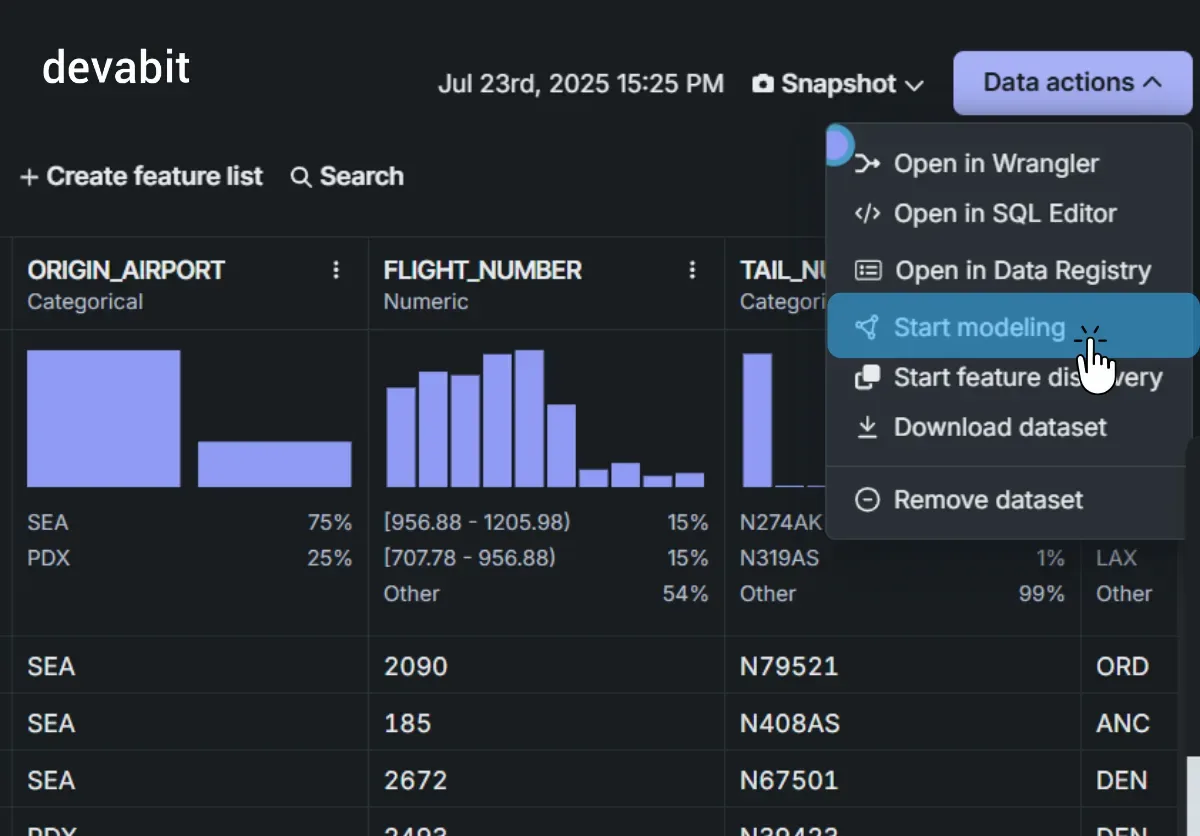

Step 1: Load & Explore the Data

First, upload your flight delay data to DataRobot Workbench for exploration and analysis, in whichever format is most convenient for you and the ML model, such as CSV, Excel, or JSON. Once you have examined your data types, click DATA MODELING > START MODELING. This launches the modeling engine, which begins by analyzing your input data.

P.S. DataRobot offers a free trial that requires no payment details, so you can test your XAI skills without purchasing a subscription.

What XAI does here:

- Identifies what types of data are available: dates, categories, numbers.

- Prepares to understand relationships in the input data.

- Learns patterns in the data.

- Helps explain what features (columns) drive the target variable.

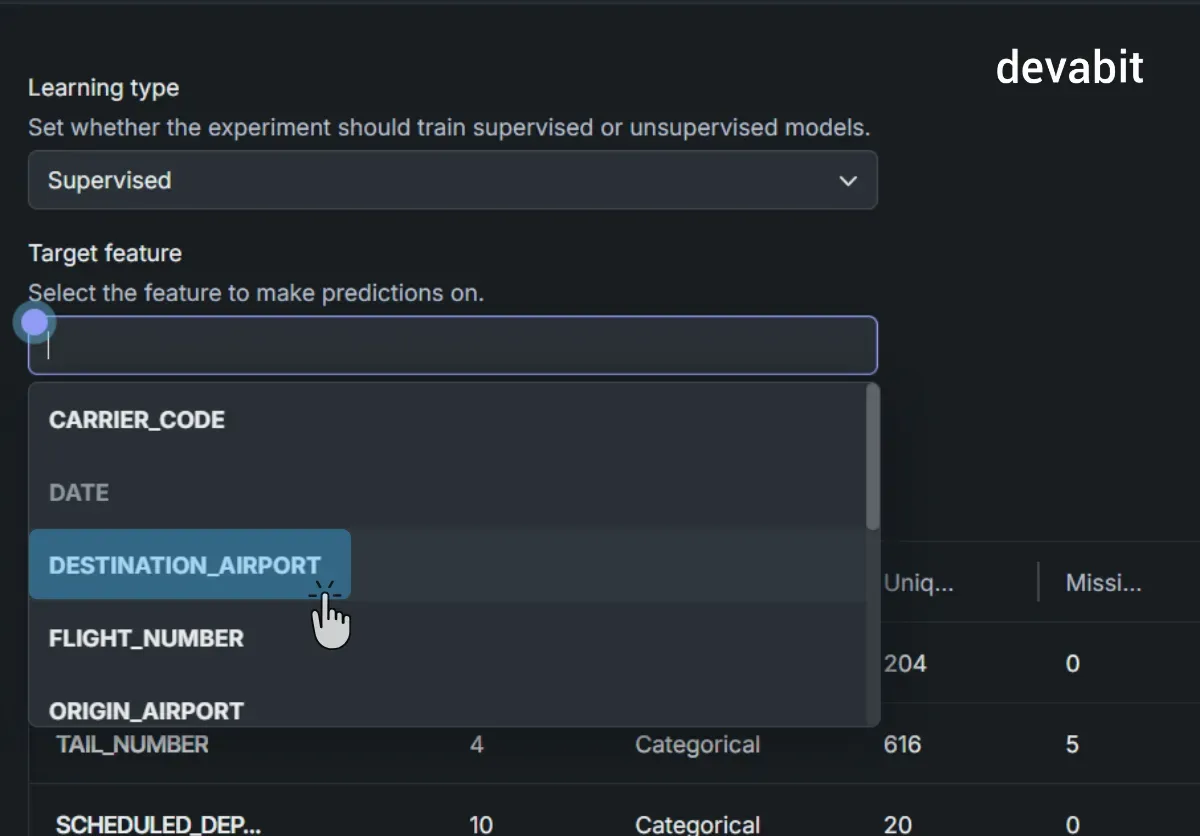
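The type-detection part of this step can be approximated locally before uploading. This is a minimal sketch, not DataRobot's logic: the column names and rows below are hypothetical stand-ins for a few of the flight-delay features listed above, and the classifier only distinguishes numbers, ISO dates (YYYY-MM-DD), and categories.

```python
import csv
import io
from datetime import date

# Made-up sample rows with hypothetical column names
SAMPLE = """flight_number,date,origin_airport,carrier_code,takeoff_delay_minutes
1021,2024-03-01,JFK,AA,12
877,2024-03-01,LAX,DL,0
1021,2024-03-02,JFK,AA,45
"""

def infer_type(values):
    """Classify a column as 'numeric', 'date', or 'categorical' from its string values."""
    def all_parse(parser):
        try:
            for v in values:
                parser(v)
            return True
        except ValueError:
            return False
    if all_parse(float):
        return "numeric"
    if all_parse(date.fromisoformat):
        return "date"
    return "categorical"

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
types = {col: infer_type([r[col] for r in rows]) for col in rows[0]}
print(types)
```

Note that `flight_number` is detected as numeric even though it is really an identifier; catching mismatches like this before modeling keeps the later explanations meaningful.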

Step 2: Choose Target Features

At this point, you choose the target feature you would like the model to predict, e.g., DESTINATION AIRPORT in our case. This choice tells the model what to focus on and determines which calculations and explanations XAI will provide.

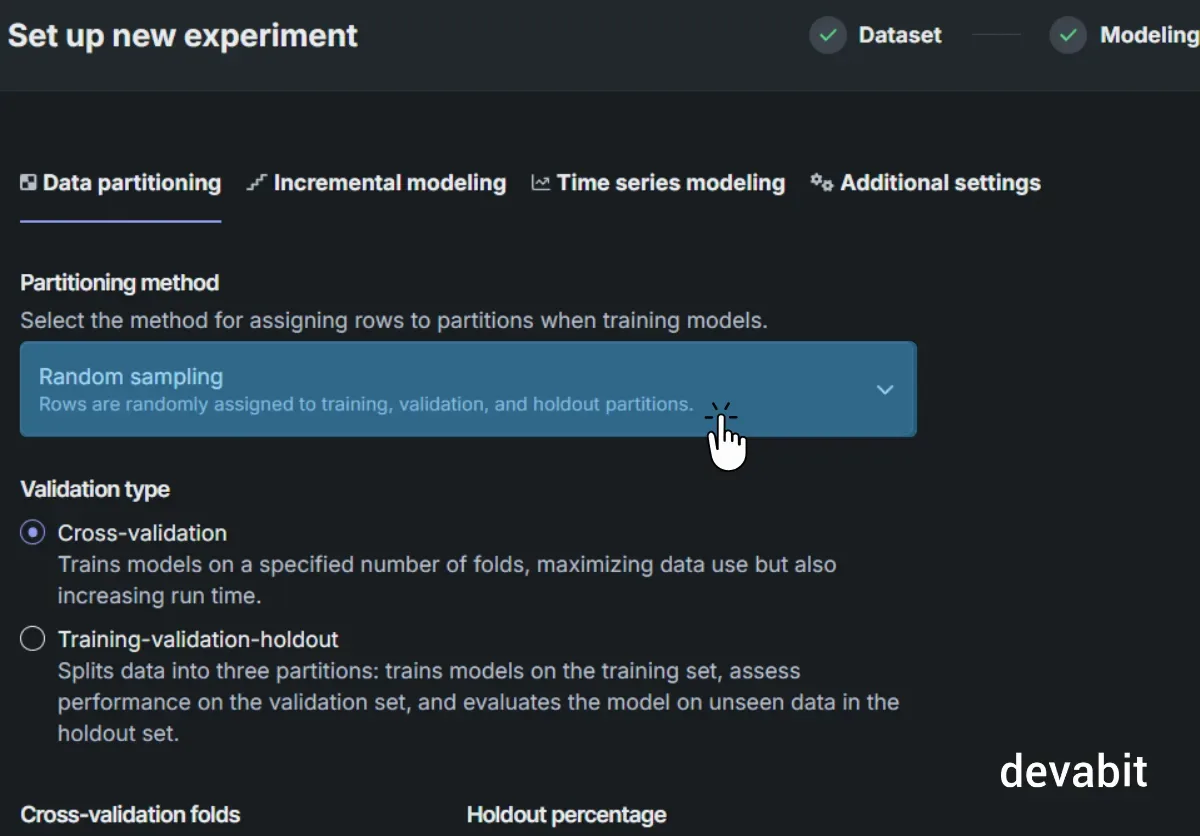

Step 3: Select Partitioning Method

At this stage, we choose RANDOM SAMPLING under "DATA PARTITIONING." This setting determines how the data is split for training and testing, which in turn affects how reliable the model, and therefore its explanations, will be.

This splits the dataset into:

- Training set (to learn)

- Validation set (to test during training)

- Holdout set (to test final performance)

Why it matters for our Explainable AI model: XAI explanations are only meaningful if the model is well-tested and not overfitting the training data.
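Random partitioning itself is simple to reproduce. The sketch below is an illustration under stated assumptions, not DataRobot's implementation: it shuffles row indices with a fixed seed, sets aside 20% as holdout, and splits the rest into 5 folds for cross-validation (the "CV splits" the platform creates when training starts), with each fold taking a turn as the validation set. The 20% and 5-fold values are illustrative; check the platform's settings for the actual defaults.

```python
import random

def random_partition(n_rows: int, holdout_frac: float = 0.2, n_folds: int = 5, seed: int = 42):
    """Shuffle row indices, carve out a holdout set, and assign the rest to CV folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)        # fixed seed -> reproducible split
    n_holdout = int(n_rows * holdout_frac)
    holdout = indices[:n_holdout]
    remaining = indices[n_holdout:]
    folds = [remaining[i::n_folds] for i in range(n_folds)]  # round-robin fold assignment
    return holdout, folds

holdout, folds = random_partition(1000)
for k, validation in enumerate(folds):
    training = [i for j, fold in enumerate(folds) if j != k for i in fold]
    print(f"fold {k}: train={len(training)}, validation={len(validation)}, holdout={len(holdout)}")
```

The holdout rows are never used during training or validation, which is what makes them a fair final check against overfitting.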

Step 4: Train the Model

With all the preparations done, click TRAIN THE MODEL, and the automated process begins. Once training starts, the platform:

- Loads data

- Creates CV splits

- Prepares feature analysis

- Starts blueprint generation

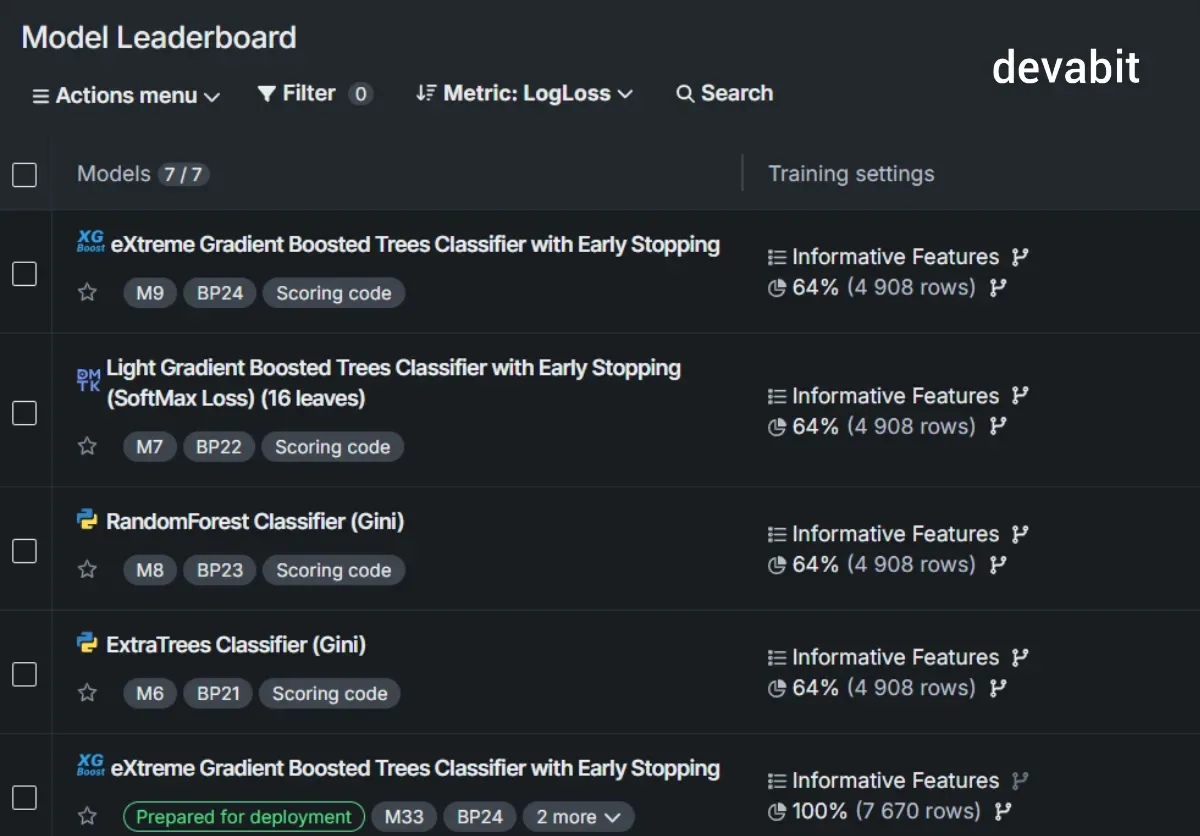

Once training finishes (which may take a while), you receive seven different machine learning models built from different blueprints, ranked on the Model Leaderboard.

Step 5: Choose the Model You Will Work With

As mentioned earlier, you will be offered seven models to explore; in this guide, we focus on Regularized Logistic Regression (L2).

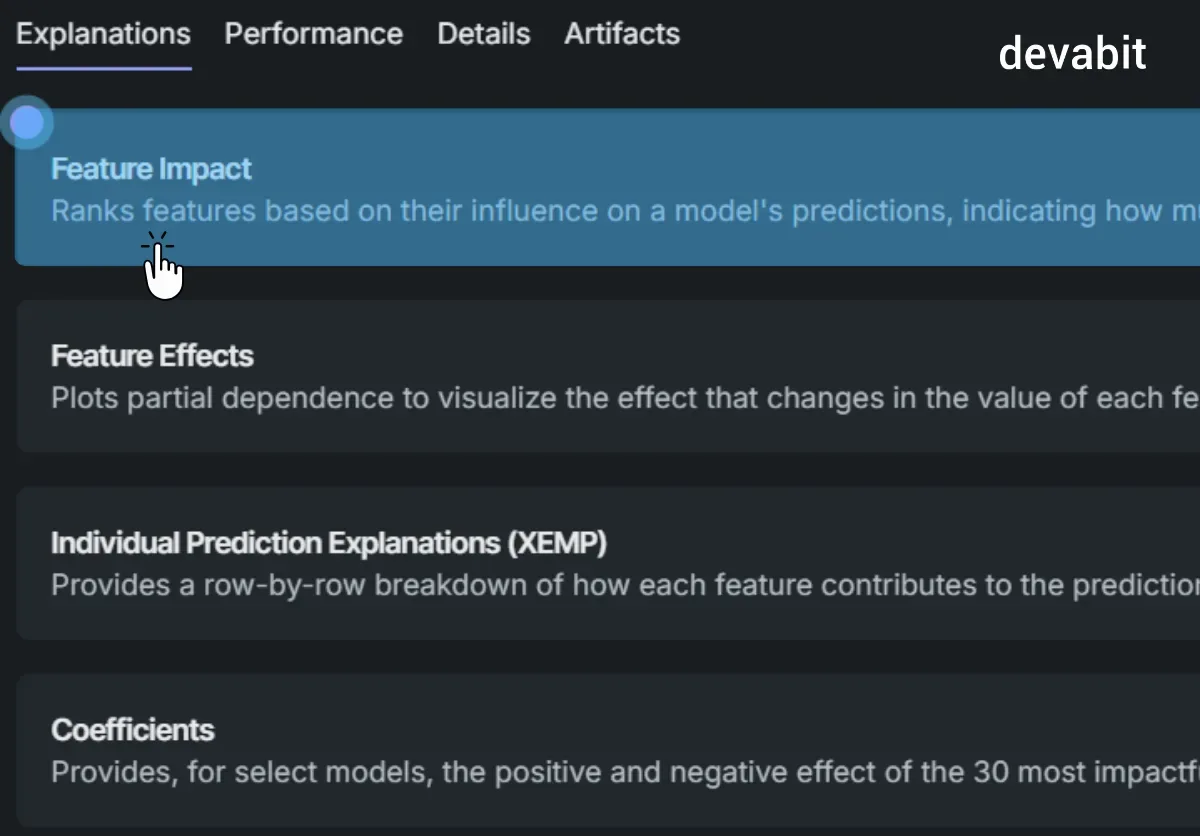

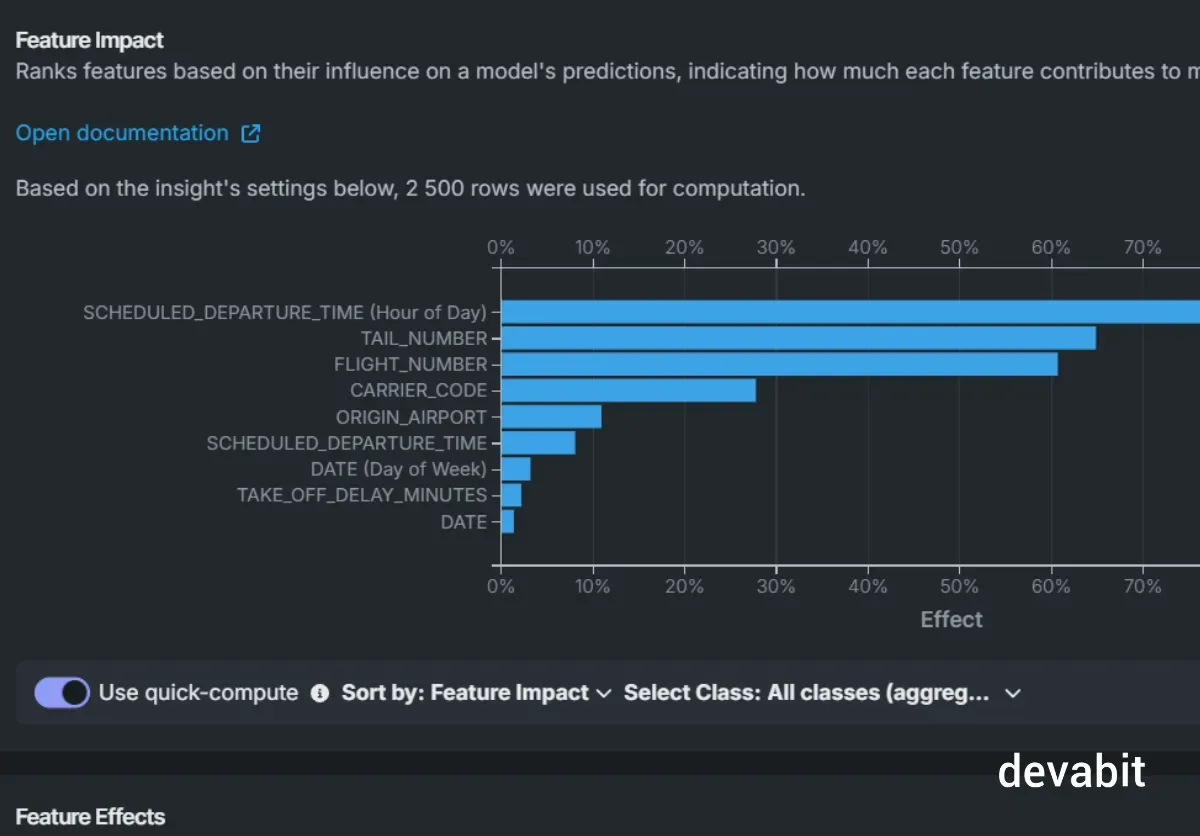

Once you click Regularized Logistic Regression (L2), you can see a set of functions to explore. Let's try FEATURE IMPACT to see how XAI helps us identify how strongly each feature affects the model's predictions.
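Regularized Logistic Regression (L2) suits a beginner's walkthrough because its learned coefficients are themselves an explanation. The sketch below trains a tiny L2-regularized logistic regression by gradient descent on made-up data. The feature names are hypothetical, and the target is a binary "delayed vs. on time" label for simplicity, whereas the walkthrough's destination-airport target is multiclass. It is not what DataRobot runs internally.

```python
import math

def train_l2_logreg(X, y, lam=0.1, lr=0.5, epochs=2000):
    """Batch gradient descent on mean log loss + (lam/2)*||w||^2 (bias not penalized)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = 1.0 / (1.0 + math.exp(-(sum(wj * xj for wj, xj in zip(w, xi)) + b)))
            err = p - yi                               # derivative of log loss w.r.t. the logit
            grad_w = [g + err * xj / n for g, xj in zip(grad_w, xi)]
            grad_b += err / n
        w = [wj - lr * (g + lam * wj) for wj, g in zip(w, grad_w)]  # L2 term shrinks weights
        b -= lr * grad_b
    return w, b

# Hypothetical features: [departure_hour (scaled), is_weekend (0/1), carrier_delay_rate (scaled)]
X = [[-1.2, 0, -0.5], [-0.8, 1, -1.0], [0.1, 0, 0.2], [0.9, 1, 1.1],
     [1.3, 0, 0.8], [-0.4, 0, -0.3], [1.1, 1, 1.4], [0.5, 0, 0.9]]
y = [0, 0, 0, 1, 1, 0, 1, 1]  # 1 = delayed (made-up labels)
w, b = train_l2_logreg(X, y)
for name, coef in zip(["departure_hour", "is_weekend", "carrier_delay_rate"], w):
    print(f"{name:>20}: {coef:+.3f}  (odds multiplier per unit: {math.exp(coef):.2f})")
```

A positive coefficient raises the odds of the positive class, and `exp(coef)` shows by how much per unit. A larger `lam` pulls all coefficients toward zero, trading some fit for simpler, more stable explanations.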

Step 6: Compute & Get the Code

In the FEATURE IMPACT section, we click the COMPUTE button to get an automatically generated chart of our features' impact, with percentage bars showing each feature's relative influence.

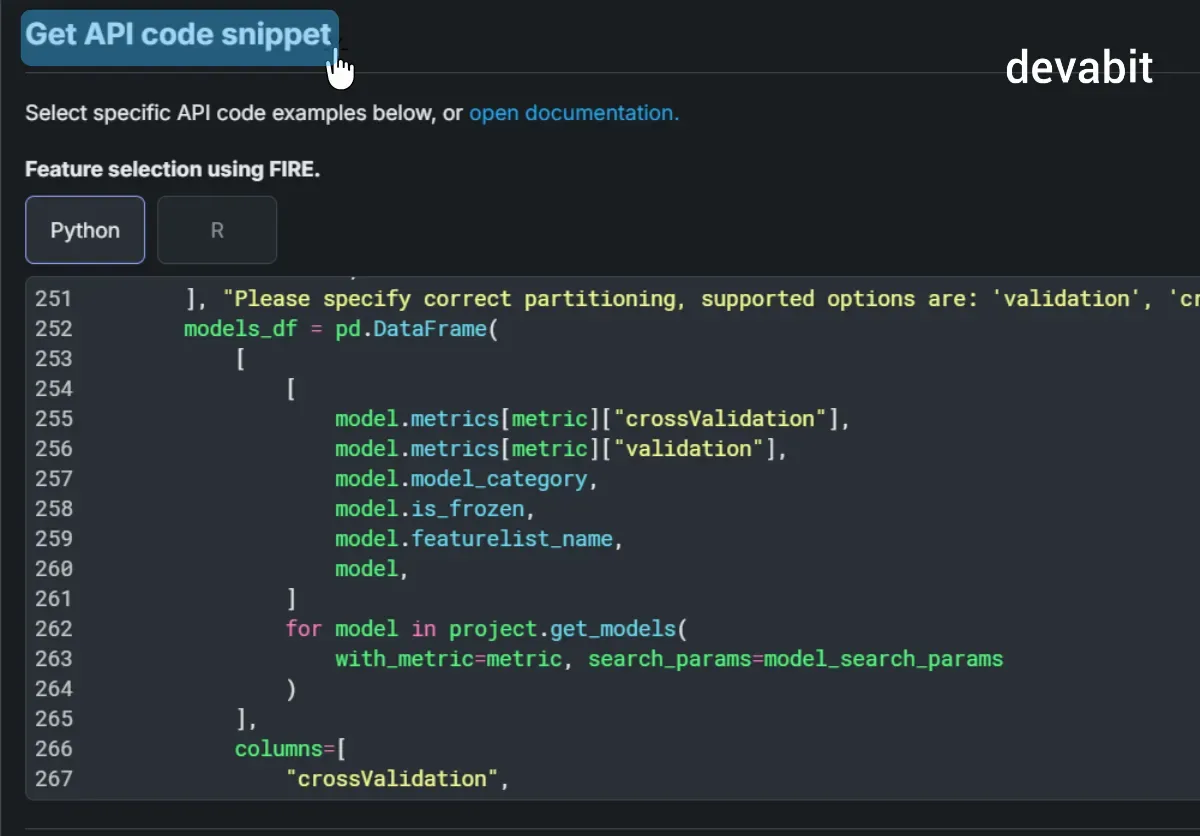

But visualization is just the tip of the XAI iceberg: we can also click GET API CODE SNIPPET to receive a ready-made code snippet in Python or R that can be easily copied and integrated into your solution.

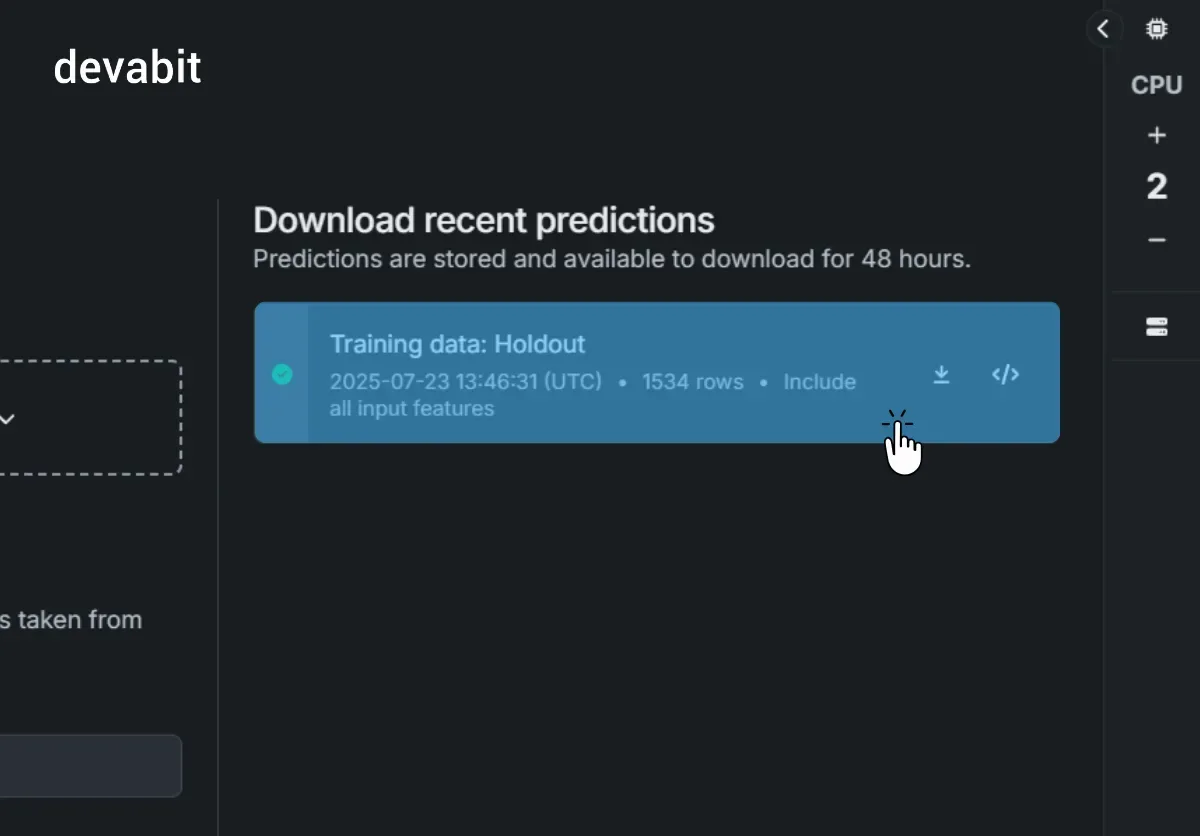
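One common way to compute a chart like Feature Impact (and, as far as we know, the default approach behind DataRobot's version) is permutation importance: shuffle one feature's column, re-score the model, and measure how much accuracy drops. The sketch below is a generic illustration with a hypothetical `model` and made-up data, not the platform's code or the API snippet it generates.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(model, rows, labels, features, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature's values are shuffled across rows."""
    rng = random.Random(seed)
    base = accuracy(model, rows, labels)
    importance = {}
    for f in features:
        drops = []
        for _ in range(n_repeats):
            column = [r[f] for r in rows]
            rng.shuffle(column)                      # break the link between f and the label
            shuffled = [{**r, f: v} for r, v in zip(rows, column)]
            drops.append(base - accuracy(model, shuffled, labels))
        importance[f] = sum(drops) / n_repeats
    return importance

# Hypothetical model: flags a flight as delayed based on departure hour only
def model(r):
    return r["dep_hour"] >= 17

rng = random.Random(1)
rows = [{"dep_hour": rng.randint(5, 23), "day_of_week": rng.randint(0, 6)} for _ in range(200)]
labels = [r["dep_hour"] >= 17 for r in rows]  # made-up labels the model fits perfectly
print(permutation_importance(model, rows, labels, ["dep_hour", "day_of_week"]))
```

Shuffling `day_of_week` changes nothing because the model ignores it, while shuffling `dep_hour` causes a large drop; normalizing such drops gives the percentage bars a Feature Impact chart displays.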

Step 7: Make Predictions

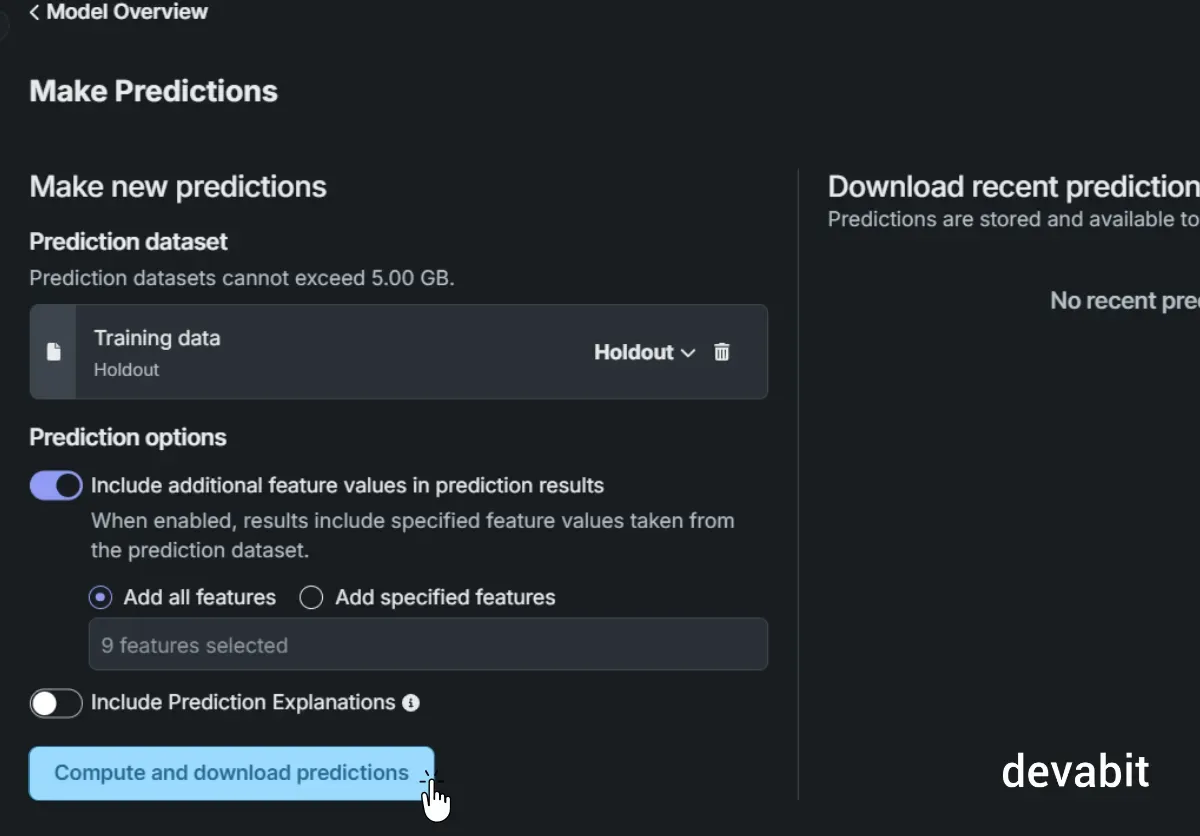

If you need a more thorough data analysis and want Explainable AI to make specific predictions, click the MAKE NEW PREDICTIONS button, choose or upload the data you would like to work with, and do not forget to turn on the INCLUDE PREDICTION EXPLANATIONS switch.

Once the calculations are done, you can download the results as a CSV file and review a detailed table with the input data, the predictions, and their XAI-generated explanations.
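Once downloaded, the predictions file can be summarized in a few lines of Python. The column names below (`PREDICTION`, `EXPLANATION_1_FEATURE_NAME`, `EXPLANATION_1_STRENGTH`, and so on) are our assumption about the layout and may differ in your export, and the rows are made up; adjust the names to match the header of the CSV you actually receive.

```python
import csv
import io

# Made-up rows; the header layout is an assumption, so check it against your own export.
EXPORT = """row_id,PREDICTION,EXPLANATION_1_FEATURE_NAME,EXPLANATION_1_STRENGTH,EXPLANATION_2_FEATURE_NAME,EXPLANATION_2_STRENGTH
0,ATL,ORIGIN_AIRPORT,1.82,CARRIER_CODE,0.64
1,ORD,FLIGHT_NUMBER,2.10,SCHEDULED_DEPARTURE_HOUR,-0.35
"""

def top_explanations(csv_text: str, max_expl: int = 2):
    """Return {row_id: (prediction, [(feature, strength), ...])}, sorted by |strength|."""
    result = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        pairs = []
        for i in range(1, max_expl + 1):
            name = row.get(f"EXPLANATION_{i}_FEATURE_NAME")
            strength = row.get(f"EXPLANATION_{i}_STRENGTH")
            if name and strength:             # skip missing explanation slots
                pairs.append((name, float(strength)))
        pairs.sort(key=lambda p: abs(p[1]), reverse=True)
        result[row["row_id"]] = (row["PREDICTION"], pairs)
    return result

for row_id, (prediction, reasons) in top_explanations(EXPORT).items():
    print(row_id, prediction, reasons)
```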

And you are all done! After training, you will have access to powerful XAI tools such as:

- Feature Impact: shows how much each input feature contributes to the model's predictions.

- Prediction Explanations: for each row, shows which features drove the model's prediction and how strongly.

- Feature Effects: visual charts that show how changing one feature affects predictions.

- Confusion Matrix: helps explain how well your model is performing for each class.

- API Code: generates ready-to-use Python or R code for working with the model and your data.

At this point, you no longer have to ask "What is XAI?". You are ready to explore how to integrate Explainable AI into your business to make it more precise, automated, and future-ready. And if you feel ready to move past the "what is XAI" stage, check out our experts' answers to the most frequently asked questions about Explainable AI below!
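To see what the Confusion Matrix summarizes, here is a minimal plain-Python version for a multiclass target such as the destination airport. The airport labels and the actual/predicted values are made up for illustration.

```python
from collections import Counter

def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]

def per_class_recall(matrix, labels):
    """Share of each actual class that the model predicted correctly."""
    return {lab: (row[i] / sum(row) if sum(row) else 0.0)
            for i, (lab, row) in enumerate(zip(labels, matrix))}

labels = ["ATL", "LAX", "ORD"]
actual    = ["ATL", "ATL", "LAX", "ORD", "ORD", "LAX", "ATL", "ORD"]
predicted = ["ATL", "LAX", "LAX", "ORD", "ATL", "LAX", "ATL", "ORD"]
matrix = confusion_matrix(actual, predicted, labels)
for lab, row in zip(labels, matrix):
    print(lab, row)
print(per_class_recall(matrix, labels))
```

Off-diagonal cells show which classes the model confuses with each other, which is often the first hint of where its explanations deserve a closer look.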

devabit Answers FAQs about Explainable AI

Why do we need explainability in AI models?

In a nutshell, we need explainability in AI models to ensure transparency of data and predictions, precision, fairness, and regulatory compliance, but there are also several deeper reasons:

- Trust and Transparency: Explainable AI helps users understand how decisions are made, building trust in the system and reducing uncertainty.

- Accountability: in critical sectors like healthcare or finance, understanding AI's decision-making is essential for ensuring accountability and justifying outcomes.

- Bias Detection: Explainable AI helps identify and address potential biases in AI models, supporting fairness in decisions.

- Regulatory Compliance: many regulations require transparency in automated decisions. Explainability helps AI systems comply with legal standards.

- Improvement and Optimization: by understanding how a model works, developers can optimize its performance and make it more accurate.

- Ethical Decision-Making: Explainable AI helps verify that decisions align with ethical standards, especially in sensitive applications like autonomous vehicles or hiring.

- Human-AI Collaboration: explainability allows people to work effectively with AI systems, trusting their suggestions while using their own judgment.

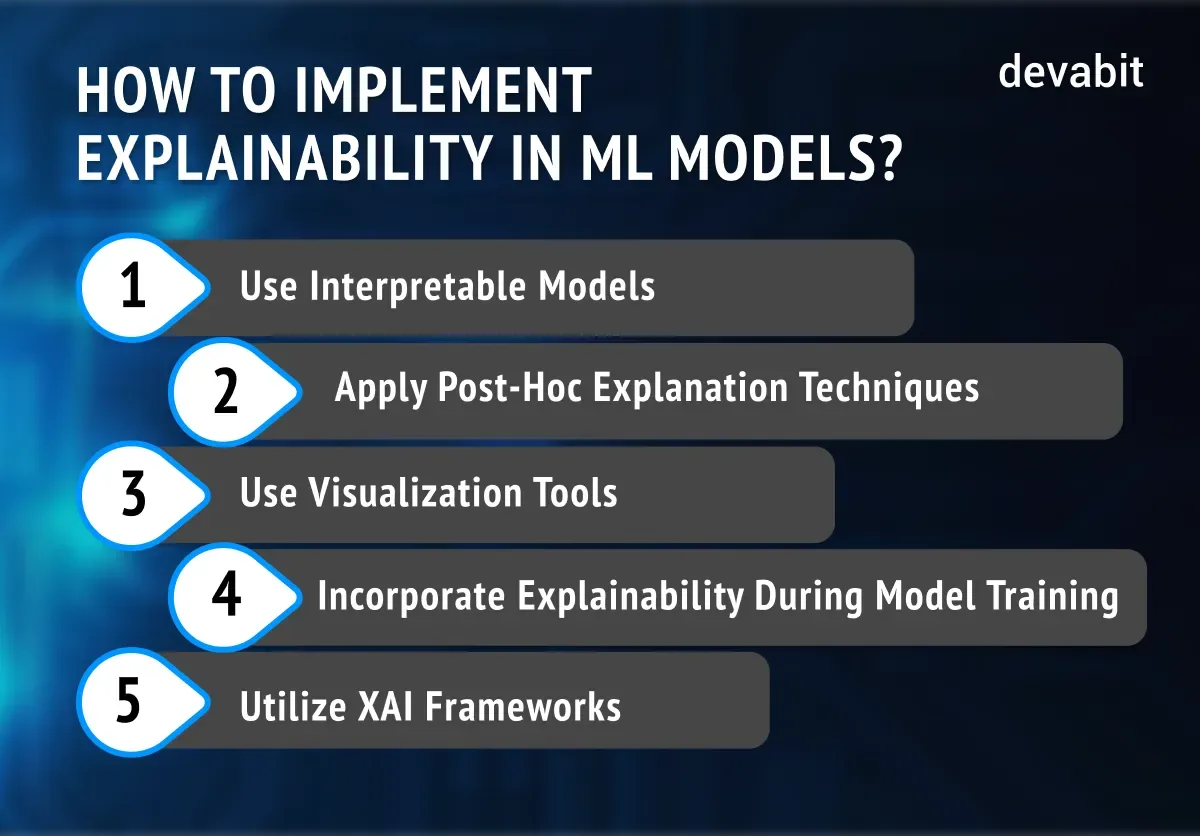

How do you implement explainability in machine learning models?

Implementing explainability in machine learning models can enhance transparency and trust in AI systems. Here are key strategies you can implement to achieve this:

- Use Interpretable Models

Opt for inherently interpretable models, such as decision trees, linear regression, or logistic regression. These models allow you to easily understand the relationships between input features and predictions, making it easier to explain their behavior.

- Apply Post-Hoc Explanation Techniques

For more complex models like deep learning or random forests, use post-hoc explanation techniques. These methods help interpret the outcomes of "black-box" models. Popular techniques include LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which provide insight into how individual features contribute to predictions.

- Use Visualization Tools

Visualization tools can be valuable in understanding machine learning models. Techniques like partial dependence plots, feature importance plots, and saliency maps allow you to visually explore how different features affect predictions and model behavior, making it easier to explain results to non-experts.

- Incorporate Explainability During Model Training

Consider explainability as part of the model training process. This involves selecting features, designing models, and tuning hyperparameters with interpretability in mind. Using simpler models or constraining model complexity can help maintain transparency while still achieving good performance.

- Utilize XAI Frameworks

XAI frameworks provide tools and methods specifically designed to improve explainability. Popular frameworks like IBM's AI Explainability 360, Google's What-If Tool, or Microsoft's InterpretML offer a range of approaches for making machine learning models more understandable and accessible.

What are SHAP and LIME, and how do they work?

SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are two popular techniques for explaining the predictions of machine learning models, particularly complex ones often described as "black boxes." In a nutshell, both help Explainable AI actually explain how a model arrived at a particular result. But there are several crucial differences between them:

- SHAP (SHapley Additive exPlanations)

SHAP is a method based on Shapley values, a concept from cooperative game theory. The idea behind SHAP is to measure each feature's contribution to the model's prediction by considering all possible combinations of features. In simple terms, it calculates how much each feature adds to (or subtracts from) the model's prediction.

SHAP can be applied to any machine learning model, even complex ones like neural networks and ensemble methods. And most importantly, SHAP provides global (overall model) and local (individual prediction) explanations, making it highly flexible and interpretable.

- LIME (Local Interpretable Model-agnostic Explanations)

LIME focuses on providing local explanations for individual predictions. It works by approximating the complex model with an interpretable, simpler model (like a linear model) in the local region around the specific prediction of interest.

LIME selects a specific data sample and perturbs the input features to generate synthetic data around that instance. It then trains an interpretable model (like a linear regression or decision tree) on this perturbed data.

LIME works well for understanding individual predictions but may not provide as much global insight into how the model behaves across the entire dataset.

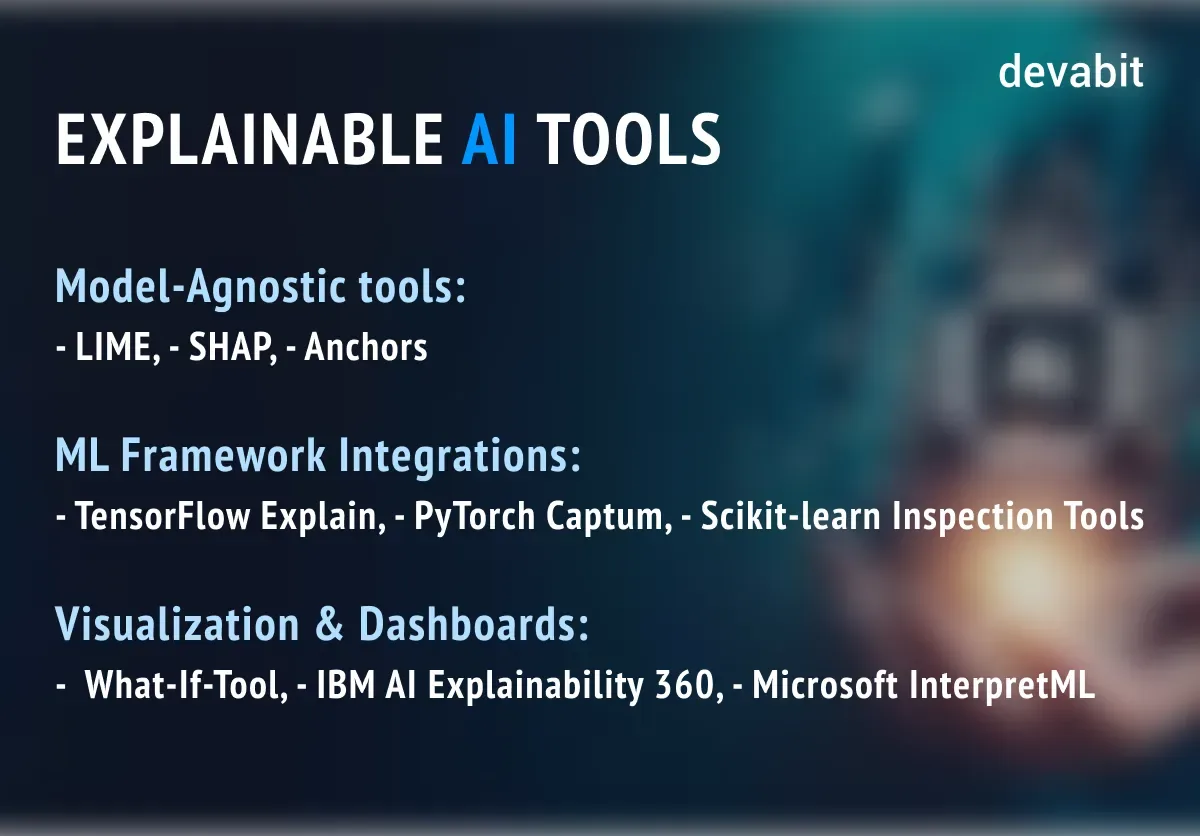

What tools are commonly used for XAI?

The tools we use in Explainable AI are divided into three main groups, and these include:

Model-Agnostic Tools:

- LIME (Local Interpretable Model-agnostic Explanations): a robust tool that aims to explain individual predictions of complex models by approximating them with a simpler, interpretable model.

- SHAP (SHapley Additive exPlanations): a widely-used model-agnostic tool, which assigns each feature an importance value that reflects how much it contributes to a model's prediction.

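- Anchors: a relatively new model-agnostic explanation method that explains a prediction with high-precision if-then rules, called "anchors": conditions under which the model's prediction stays the same with high probability, whatever the values of the other features.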

ML Framework Integrations:

- tf-explain: an open-source XAI library that provides a suite of visualization techniques for explaining the behavior of complex TensorFlow/Keras models, such as deep neural networks.

- PyTorch Captum: provides several methods for understanding how models arrive at decisions by attributing predictions to individual input features.

- Scikit-learn Inspection Tools: provides a range of interpretability tools that work with traditional machine learning models (like decision trees, linear regression, and support vector machines).

Visualization & Dashboards:

- What-If Tool: a Google-developed tool that lets users interact with a trained machine learning model by exploring different data inputs and immediately seeing how the model's predictions change.

- IBM AI Explainability 360: an IBM toolkit that integrates a range of explainability techniques that allow users to explore both model behavior and data bias.

- Microsoft InterpretML: the tool that focuses on making complex models interpretable through both global and local explanation methods.
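The idea behind Anchors is easiest to see by estimating a rule's precision: hold the features named in the rule fixed, resample the others, and count how often the prediction stays the same. The sketch below covers only that estimation step, using a hypothetical `loan_model` and made-up sampling ranges; the actual Anchors algorithm (available, for example, in the Alibi library) also searches for the rule automatically.

```python
import random

def loan_model(x):
    """Hypothetical black box: approve if income is high and debt is low."""
    return "approve" if x["income"] > 50 and x["debt_ratio"] < 0.4 else "deny"

def anchor_precision(model, instance, anchor, sampler, n=2000, seed=0):
    """Share of perturbed samples (anchor features held fixed) that keep the original prediction."""
    rng = random.Random(seed)
    target = model(instance)
    same = 0
    for _ in range(n):
        sample = sampler(rng)              # fresh random values for every feature...
        for f in anchor:
            sample[f] = instance[f]        # ...except the anchored ones
        same += model(sample) == target
    return same / n

def sampler(rng):
    """Hypothetical perturbation distribution for each feature."""
    return {"income": rng.uniform(10, 120), "debt_ratio": rng.uniform(0.0, 0.9), "age": rng.randint(18, 80)}

applicant = {"income": 80, "debt_ratio": 0.2, "age": 35}
for anchor in (["income"], ["income", "debt_ratio"]):
    print(anchor, anchor_precision(loan_model, applicant, anchor, sampler))
```

Anchoring income alone keeps the "approve" decision only part of the time, while anchoring both income and debt ratio keeps it every time, so "income > 50 AND debt_ratio < 0.4" would be reported as the high-precision anchor.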

Do users trust AI more when explanations are available?

In short, yes! Building trust in AI-generated results is the cornerstone of Explainable AI. Even though AI is a relatively "young" technology, it has come a long way from hallucinations and fictional sources to being considered a trustworthy source by many users. Still, according to Accenture, 83% of consumers would be more comfortable with AI if it provided clear explanations for its decisions, and this is where XAI enters the stage. Here are several reasons why people tend to trust Explainable AI more than regular AI:

- Increased Transparency: not knowing how exactly AI arrived at certain results leads to doubts about its reliability or even outright rejection. In contrast, Explainable AI does not gatekeep; it lets users delve into the decision-making process.

- Reduced Risk: when AI is used in vital areas such as health, law, education, or psychology, Explainable AI makes users aware of the steps it takes to produce dependable outputs.

- Perceived Fairness: explaining why a particular decision was made, and how each data point affected the final result, lets users check whether results are unbiased and increases their trust in the fairness of outcomes.

Summing up, Explainable AI is a huge step toward making AI a reliable, fair, and trustworthy basis for decision-making. As XAI's explanatory abilities improve, people will have fewer reasons to doubt AI-generated information.

AI Evolution: What Is Next?

AI is quietly becoming the connective tissue of next-gen experiences, and nowhere is this clearer than in healthcare. Conversational AI is stepping in as a digital front desk and assistant, guiding patients through symptom checks, scheduling visits, explaining lab results, and nudging them to follow treatment plans. It doesn't replace clinicians; it filters noise, surfaces what matters, and keeps human experts focused on complex decisions and empathy-driven care.

The same shift is happening in how we build the world around us. AI-powered 3D modeling turns rough ideas, text prompts, sketches, and simple concepts into usable 3D assets for medical devices, training simulators, hospital layouts, and beyond. Instead of weeks of manual modeling, teams can iterate in hours, test more options, and design environments and products that are safer, more ergonomic, and tailored to real human needs.

Underneath both stories is a bigger trend: AI is evolving from a back-end analytics tool into a creative and collaborative partner. New waves of multimodal, generative, and edge AI let systems see, talk, design, and react in real time, whether they're helping a patient at home or powering a 3D prototype in a design lab. It's all one movement: using AI not just to optimize processes, but to reshape how we imagine, design, and deliver human-centered experiences.

Why Listen to devabit?

Over more than a decade of building custom software solutions and consulting for leading IT industry players, our top-rated web development company in Europe has gained best-in-class insight into seamless AI implementation for any business. We do not just tell clients what XAI is; we let them see it firsthand through our solutions.

devabit has helped numerous companies, start-ups, and enterprise-level organisations overcome their tech limitations and bring innovation in-house. One example is the AI-driven automotive SaaS solution our custom development company built to empower leading US distributors.

Do not hesitate to take your next big step toward Explainable AI: contact us or explore our extensive consulting services!
