devabit vs ChatGPT: Agentic AI Governance Development Battle

The rise of the machines has been cancelled. At least for now. Some of you may ask why, and fairly so: everyone today seems to have at least one friend who is afraid AI will take their job in the near future. And if you do not have such a friend, maybe it's you? But at devabit, every single one of our employees sleeps like a baby at night, knowing AI is not even close to taking their positions. Why are we so sure? We are here to tell you exactly why artificial intelligence, conversational models, and chatbots are actually getting dumber, despite faster responses and an all-knowing appearance. As an example, we took a niche topic, agentic AI governance, and asked a premium version of ChatGPT 5.2 the questions most of our clients ask us daily. Right below, you will see how the power of artificial intelligence is beaten by real-life expertise. Do not miss the chance to look fear in the eyes and forget everything you knew about AI before.

Is it true that conversational AI models are actually getting less competent?

Yes. Conversational AI models like ChatGPT are becoming less competent in some areas, despite faster responses. This is largely due to training on AI-generated data, which can be inaccurate, biased, or even hallucinated. While these models are trained on massive datasets that include human-generated content, they are increasingly exposed to AI-generated content, which often lacks the quality control of real-world data.

What is agentic AI governance?

Agentic AI governance is the framework of engineering controls and policies that govern AI systems capable of performing actions in the real world, such as sending emails, triggering workflows, or making financial transactions.

What AI business consulting services does your company deliver?

At devabit, we offer comprehensive AI business consulting services to help organizations integrate AI technologies safely and effectively. Our services include:

- AI Strategy and Roadmapping: helping businesses define their AI goals and create a clear, actionable implementation plan.

- AI Compliance: establishing robust frameworks for AI model oversight, data privacy, and ethical AI practices.

- Agentic AI Implementation: setting up operational controls for AI systems that take actions, ensuring they are safe, predictable, and compliant.

- AI Model Training and Optimization: custom AI solutions tailored to your business needs, improving accuracy, efficiency, and performance.

- Risk Assessment and Mitigation: identifying potential risks associated with AI adoption, including bias, security vulnerabilities, and operational failures.

Here is what we will cover:

- How AI Is Actually Getting Less Smart

- We Asked ChatGPT about Agentic AI Governance & Proved It Wrong

- 01 / What is agentic AI governance, and how is it different from "normal" AI governance?

- 02 / What permissions model works best for AI agents (RBAC, ABAC, scoped tokens)?

- 03 / How do we prevent an autonomous agent from doing harmful or expensive actions?

- 04 / How do you test an agent safely before deployment (red teaming, simulations)?

- 05 / How do we start an AI governance programme from zero?

- ChatGPT vs. devabit: What Is the Score?

How AI Is Actually Getting Less Smart

So, before jumping right into our devabit vs. ChatGPT battle, let's make it clear: why is AI actually getting less smart, not the other way around? After all, right now it seems like ChatGPT knows more than Google, simplifies our daily routines, acts as a doctor, teacher, therapist, or personal coach, and spares parents from answering their children's tricky questions.

So it is fair to doubt that artificial intelligence is becoming less competent than it used to be. But here is the harsh truth:

- AI trained on AI. Conversational AI models, such as ChatGPT, often sacrifice the quality and accuracy of their answers for the instant response speed users expect. Not long ago, AI models were trained mostly on human-generated data, like books, articles, scientific research papers, and personal user experience. Now the situation is sharply different. Many users write content with AI, post it online without any fact-checking or proofreading, and that content then becomes available as training data for future models. Simply stated, artificial intelligence is now being trained on AI-produced data, even when it is fake, inaccurate, or hallucinated. Sounds sad, doesn't it? What's even worse, fully blocking AI-generated data from training sets is technically near-impossible, so we may soon observe what researchers call model collapse.

- User preference over accuracy. Large Language Models (LLMs), such as ChatGPT, were pre-trained on huge datasets and taught to produce the most accurate, data-driven answers based on solid facts. But the key word is "were". With each update, the models have been trained to align their answers more closely with user preferences in the name of being helpful, harmless, or even empathetic. As a result, LLMs now tend to choose the answer you are likely to like, no matter how truthful it is. Not in all cases, but are you truly ready to risk your reputation on it?

- Hallucinations are getting business-harmful. Yes, we are all familiar with made-up sources, small facts, or stories, but how about huge discounts and promo codes your company never actually offered? Here is a real case: a small business in England ran an AI chatbot on its website, and a customer spent hours pushing it for a discount that went from 25% off to an unbelievable 80%! When the business owner refused to honour it, the customer threatened to file a small claims court case. AI does not sound so fun now, does it?

If that is still not enough to prove our point, it is time to move straight to agentic AI governance.

We Asked ChatGPT about Agentic AI Governance & Proved It Wrong

01 / What is agentic AI governance, and how is it different from "normal" AI governance?

ChatGPT 5.2 Answers:

devabit Answers:

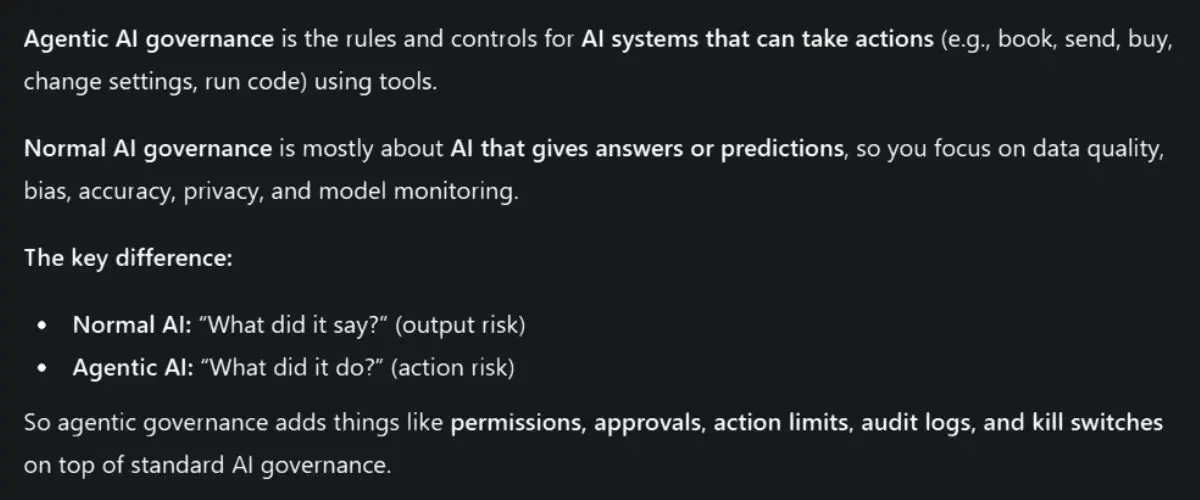

Quite close, but that definition is not nearly as precise and clear as most of our clients expect it to be. In reality, even experienced entrepreneurs hesitate about AI implementation, especially in such vital and sensitive industries as healthcare, governance, education, or fintech. So, a transparent understanding of what agentic AI governance is serves as the first step towards making your business client-oriented and unique among thousands of niche competitors. Our definition looks like this:

Agentic AI governance is the set of engineering and policy controls we put around AI systems that can take actions in your environment (call APIs, update records, trigger workflows, or spend resources) so that they operate safely, predictably, and auditably.

"Normal" AI governance mainly covers model and data risk (quality, bias, privacy, documentation, monitoring). Agentic governance adds operational control over execution, specifically:

- permissioning (least-privilege tool access);

- approval gates for higher-risk actions;

- hard limits (spend, volume, scope);

- end-to-end logging of tool calls and outcomes;

- incident controls (alerts + kill switch/rollback).
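To make these five controls more concrete, here is a minimal Python sketch of a wrapper that every agent tool call could pass through. It is an illustration under simplifying assumptions, not devabit's production design or any real framework's API: `GovernedExecutor`, `ToolCall`, the tool names, and the spend figures are all hypothetical, and the approval gate is a plain callback that a real deployment would route to a human reviewer.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class ToolCall:
    tool: str          # hypothetical tool name, e.g. "issue_refund"
    args: dict
    cost: float = 0.0  # estimated spend for this call


@dataclass
class GovernedExecutor:
    allowed_tools: Set[str]                 # permissioning: least-privilege allowlist
    high_risk_tools: Set[str]               # tools that must pass an approval gate
    spend_limit: float                      # hard limit on total spend per run
    approve: Callable[[ToolCall], bool]     # approval gate (a human in practice)
    tools: Dict[str, Callable[..., object]]
    audit_log: List[dict] = field(default_factory=list)
    spent: float = 0.0
    killed: bool = False                    # kill switch state

    def kill(self) -> None:
        """Incident control: block every further tool call immediately."""
        self.killed = True

    def _log(self, call: ToolCall, outcome: str) -> None:
        # End-to-end logging: every attempt is recorded, including blocked ones.
        self.audit_log.append(
            {"ts": time.time(), "tool": call.tool, "args": call.args, "outcome": outcome}
        )

    def execute(self, call: ToolCall) -> object:
        if self.killed:
            self._log(call, "blocked:kill_switch")
            raise PermissionError("kill switch is active")
        if call.tool not in self.allowed_tools:
            self._log(call, "blocked:not_permitted")
            raise PermissionError(f"tool {call.tool!r} is not permitted")
        if self.spent + call.cost > self.spend_limit:
            self._log(call, "blocked:spend_limit")
            raise PermissionError("spend limit would be exceeded")
        if call.tool in self.high_risk_tools and not self.approve(call):
            self._log(call, "blocked:approval_denied")
            raise PermissionError("approval denied")
        try:
            result = self.tools[call.tool](**call.args)
        except Exception:
            self._log(call, "failed")
            raise
        self.spent += call.cost
        self._log(call, "executed")
        return result
```

Blocked attempts are logged alongside successful ones, which is what makes the audit trail useful when you review an incident after pulling the kill switch.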

02 / What permissions model works best for AI agents (RBAC, ABAC, scoped tokens)?

ChatGPT 5.2 Answers:

devabit Answers:

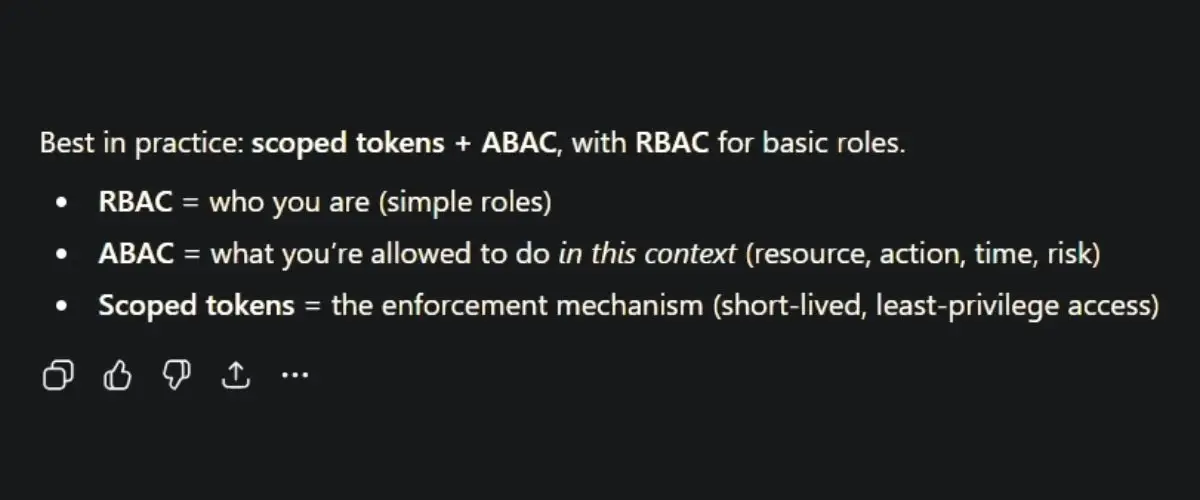

In fact, there is no single "best" model on its own. The best setup is the one that keeps an agent useful without turning it into a silent admin account. In practice, the most reliable pattern is RBAC for the coarse boundary + ABAC for the real rules + scoped tokens to enforce it.

- RBAC answers: "What kind of actor is this?" (support agent, finance agent, HR agent). It is simple and gives you a clean starting perimeter.

- ABAC answers the governance question you actually care about: "Is this specific action allowed right now, on this specific resource, for this specific reason?" Example: "Can update customer address only for accounts in region X, only during business hours, only when ticket severity ≥ 2, only if the change is under a risk threshold."

- Scoped, short-lived tokens make it real: the agent holds no standing permissions; instead, it gets a temporary, narrowly scoped credential for exactly what it is about to do.
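Here is a minimal Python sketch of how the three layers can stack, reusing the address-update example above. Everything in it is a hypothetical illustration: the role names, `Request`, `ScopedToken`, the "EU" region standing in for "region X", the 9:00 to 17:00 business-hours window, and the 0.3 risk threshold. A real system would issue tokens from an identity provider rather than generating them in-process.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable, Dict, Set

# RBAC: the coarse boundary, i.e. which actions each agent role may ever attempt.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "support_agent": {"read_customer", "update_customer_address"},
    "finance_agent": {"read_invoice", "issue_refund"},
}


@dataclass(frozen=True)
class Request:
    role: str
    action: str
    account_region: str
    ticket_severity: int
    risk_score: float  # hypothetical scale: 0.0 (harmless) to 1.0 (very risky)
    at: datetime       # request time, assumed to be in UTC


def update_address_policy(req: Request) -> bool:
    # ABAC: the fine-grained rule from the example in the text.
    return (
        req.account_region == "EU"   # placeholder for "region X"
        and 9 <= req.at.hour < 17    # business hours
        and req.ticket_severity >= 2
        and req.risk_score < 0.3     # hypothetical risk threshold
    )


# Actions without an ABAC policy are denied by default.
ABAC_POLICIES: Dict[str, Callable[[Request], bool]] = {
    "read_customer": lambda req: True,
    "update_customer_address": update_address_policy,
}


@dataclass(frozen=True)
class ScopedToken:
    value: str
    action: str
    resource: str
    expires_at: datetime


def issue_token(req: Request, resource: str, ttl_seconds: int = 60) -> ScopedToken:
    """Issue a short-lived credential valid for one action on one resource."""
    if req.action not in ROLE_PERMISSIONS.get(req.role, set()):
        raise PermissionError("RBAC: role may not perform this action")
    policy = ABAC_POLICIES.get(req.action)
    if policy is None or not policy(req):
        raise PermissionError("ABAC: request does not satisfy policy")
    return ScopedToken(
        value=secrets.token_urlsafe(16),
        action=req.action,
        resource=resource,
        expires_at=req.at + timedelta(seconds=ttl_seconds),
    )


def token_allows(token: ScopedToken, action: str, resource: str, now: datetime) -> bool:
    """Enforcement point: checked by the tool right before it acts."""
    return token.action == action and token.resource == resource and now < token.expires_at
```

Note the default-deny choice: an action that passes the RBAC check but has no ABAC policy still gets no token.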

03 / How do we prevent an autonomous agent from doing harmful or expensive actions?

ChatGPT 5.2 Answers:

devabit Answers:

To prevent an autonomous agent from doing harmful or expensive things, you want to shrink its "blast radius" and make risky actions hard to execute by accident.

- First, do not grant broad account access. Use short-lived, tightly scoped tokens so it can do one specific task and nothing else. Anything irreversible or costly (payments, refunds, deletions, mass emails) should require human approval or a two-step confirmation.

- Add pre-flight checks: before executing, run simple policy rules such as "Is this in the allowed domain?", "Does it touch sensitive data?", or "Is the recipient external?" If a check fails, block the action or escalate it.

- Watch for anomalies and alert when behaviour deviates from the norm: sudden bursts of actions, new vendors, unusual times of day, or repeated failures.

- Always have a kill switch to instantly disable tool access and pause runs, with clear ownership for incident response.
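The pre-flight checks and burst alerting described above can be sketched in a few lines of Python. This is a hypothetical illustration: the `Action` type, the `example.com` allowlist, the sensitive field names, and the burst thresholds are all placeholders you would replace with your own policies.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, FrozenSet, Optional
from urllib.parse import urlparse

ALLOWED_DOMAINS = {"example.com"}        # hypothetical allowlist
INTERNAL_EMAIL_DOMAIN = "example.com"    # hypothetical company domain
SENSITIVE_FIELDS = {"ssn", "card_number", "password"}


@dataclass(frozen=True)
class Action:
    kind: str                            # e.g. "fetch_url", "send_email"
    target_url: Optional[str] = None
    recipient: Optional[str] = None
    fields: FrozenSet[str] = frozenset() # data fields the action reads or writes


def preflight(action: Action) -> str:
    """Return "allow", "escalate" (route to a human), or "block"."""
    if action.target_url is not None:
        host = urlparse(action.target_url).hostname or ""
        if not any(host == d or host.endswith("." + d) for d in ALLOWED_DOMAINS):
            return "block"               # outside the allowed domain
    if action.fields & SENSITIVE_FIELDS:
        return "escalate"                # touches sensitive data
    if action.recipient is not None and not action.recipient.lower().endswith(
        "@" + INTERNAL_EMAIL_DOMAIN
    ):
        return "escalate"                # external recipient
    return "allow"


class BurstDetector:
    """Flag a sudden burst: more than max_actions within window_seconds."""

    def __init__(self, max_actions: int, window_seconds: float) -> None:
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self._timestamps: Deque[float] = deque()

    def record(self, ts: float) -> bool:
        """Record one action at time ts (seconds, non-decreasing); True means alert."""
        self._timestamps.append(ts)
        # Drop actions that fell out of the sliding window.
        while ts - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_actions
```

Other anomaly signals from the list, such as new vendors or unusual times of day, would follow the same pattern: a cheap check that raises an alert rather than silently letting the run continue.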

Simply stated, all of the measures above should be implemented by an agentic AI governance development partner that guides you through every step of agent implementation, from the idea and launch to post-deployment support. And that is exactly what our company does.

04 / How do you test an agent safely before deployment (red teaming, simulations)?

ChatGPT 5.2 Answers:

devabit Answers:

Testing AI agents is a critical step in any AI implementation process, especially in sensitive industries such as governance. To test an AI agent safely, we treat it like a new junior employee who has just been handed admin tools: start in a controlled environment, try to make it fail on purpose, and only then give it real access.

- Mirror production, but with fake data and mocked tools (or read-only access). Check every tool call, what it changed, and whether it followed your rules (permissions, limits, approvals).

- Feed it hundreds of realistic workflows plus edge cases: missing info, contradictory instructions, timeouts, and partial failures.

- Actively attack it with prompt injection via web pages or documents, social engineering, data-exfiltration attempts, and escalation tricks.

- Roll out in stages: draft-only first, then human-approved execution, then limited auto-execution for low-risk actions, and only then expand.

- Keep a kill switch and rollback paths from day one.
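A minimal Python sketch of two ideas from this list: mocked tools that record calls instead of touching production, and staged autonomy levels that gate what the agent may actually execute. `Stage`, `MockTool`, and `dispatch` are hypothetical names for illustration; a real harness would also replay hundreds of scenarios and check every recorded call against your permissions, limits, and approval rules.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Any, Callable, Dict, List, Optional, Tuple


class Stage(IntEnum):
    DRAFT_ONLY = 1      # agent proposes actions, nothing is executed
    HUMAN_APPROVED = 2  # every action needs explicit approval
    LIMITED_AUTO = 3    # low-risk actions run automatically, others need approval


@dataclass
class MockTool:
    """Stand-in for a real tool: records every call and can simulate failures."""
    name: str
    fail_with: Optional[Exception] = None  # e.g. TimeoutError to simulate a timeout
    calls: List[Dict[str, Any]] = field(default_factory=list)

    def __call__(self, **kwargs: Any) -> Dict[str, Any]:
        self.calls.append(kwargs)
        if self.fail_with is not None:
            raise self.fail_with
        return {"ok": True, "tool": self.name, **kwargs}


def dispatch(
    stage: Stage,
    tool: MockTool,
    args: Dict[str, Any],
    low_risk: bool,
    approve: Callable[[str, Dict[str, Any]], bool],
) -> Tuple[str, Optional[Dict[str, Any]]]:
    """Decide whether a proposed tool call runs, given the current rollout stage."""
    if stage == Stage.DRAFT_ONLY:
        return ("drafted", None)
    if stage == Stage.LIMITED_AUTO and low_risk:
        return ("executed", tool(**args))
    if approve(tool.name, args):
        return ("executed", tool(**args))
    return ("rejected", None)
```

Promoting the agent from one `Stage` to the next then becomes an explicit, reviewable configuration change rather than a silent expansion of its powers.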

05 / How do we start an AI governance programme from zero?

ChatGPT 5.2 Answers:

devabit Answers:

It is the question 90% of our consultations start with. And to be fair, for some of our clients, it may sound a bit scary and undefined, because no one likes to start from scratch. No one but devabit. Our company specializes in building custom software products from the ground up. Here is how we approach launching an AI governance programme for our clients:

First, we define clear ownership. Our company identifies a project lead and key stakeholders across relevant departments, such as legal, compliance, security, and product, who will champion and oversee the governance framework.

Next, we assess your current AI landscape. Our team identifies the AI systems and use cases you already have in place or plan to deploy. This includes evaluating third-party vendors and understanding where AI affects business operations.

devabit then classifies AI risks. Not all AI projects are equal, so our team categorizes your use cases by potential risk, whether data privacy, ethical concerns, or operational impact, and creates a tailored governance strategy for each.
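As a simplified illustration of this step, here is a Python sketch that sorts use cases into risk tiers and maps each tier to a baseline set of controls. The criteria, tier thresholds, and control lists are hypothetical examples chosen for readability; they are not a regulatory classification scheme, and a real assessment would weigh many more factors.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class UseCase:
    name: str
    handles_personal_data: bool  # data privacy
    affects_individuals: bool    # ethical concerns: decisions about people
    takes_actions: bool          # operational impact: acts, not just advises
    irreversible_actions: bool   # e.g. payments or deletions


def classify(uc: UseCase) -> RiskTier:
    """Count risk factors; irreversible actions alone are enough for HIGH."""
    factors = sum([uc.handles_personal_data, uc.affects_individuals, uc.takes_actions])
    if uc.irreversible_actions or factors >= 2:
        return RiskTier.HIGH
    if factors == 1:
        return RiskTier.MEDIUM
    return RiskTier.LOW


# Baseline controls per tier; each tier includes everything in the tier below it.
BASELINE_CONTROLS: Dict[RiskTier, List[str]] = {
    RiskTier.LOW: ["inventory entry", "usage logging"],
    RiskTier.MEDIUM: ["inventory entry", "usage logging", "periodic output review"],
    RiskTier.HIGH: [
        "inventory entry",
        "usage logging",
        "periodic output review",
        "human approval gates",
        "pre-deployment red teaming",
        "kill switch",
    ],
}
```

Each tier then gets its own governance strategy, so a low-risk FAQ bot is not buried under the same controls as an agent that can issue refunds.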

After that, our experts implement monitoring and reporting systems. We set up metrics and automated systems to track AI performance, output quality, compliance, and potential risks in real time. This helps detect issues early and ensures AI models behave as expected.

Finally, our quality assurance experts test and iterate. We launch the programme with a pilot project, gather feedback, and make continuous improvements to refine the process as we scale it across other AI initiatives.

This is what brings real clarity and reassurance to clients who are still hesitant to bring AI into their business processes.

ChatGPT vs. devabit: What Is the Score?

At the end of the day, would you still entrust your software development consulting or assistance to even the most innovative AI model? We are not so sure. At devabit, by contrast, we specialize in delivering AI consulting and development services that empower businesses to harness AI effectively while ensuring strong governance. While we admit the undeniable power of artificial intelligence, we have been through more than enough AI projects to state clearly that AI is not a panacea: it can be your best buddy in some cases, yet still stab you in the back when you least expect it.

Our AI business consulting services exist to help small, medium, and enterprise-level businesses realistically assess their AI implementation ambitions and the best opportunities we can reach together. And once you get an expert look at your internal processes, you will never lower your standards to late-night messages like "Answer shorter", "I asked for the picture in PNG format", and "Make it simpler" again. Contact us for a free quote on your AI implementation idea from devabit experts, and let's cancel the rise of the machines together.
