Edge AI Computing: Everything You Need to Know from A to Z

From managing complex manufacturing processes to writing daily emails, AI assists companies in so many ways that it has become an inseparable part of our routine (spoiler alert: the trend toward edge AI computing brings artificial intelligence even closer, both physically and conceptually). Billions of users rely on AI and expect "here and now" outputs, yet dependence on cloud computing and a stable internet connection can be a severe issue, especially in sensitive domains like healthcare, logistics, and automotive. This is where edge AI computing comes into play. According to International Data Corporation, $261 billion was spent on edge computing solutions globally in 2025, and this number is projected to keep growing in the coming years. So why are edge AI computing solutions gaining momentum? What benefits can edge AI computing bring to the modern business ecosystem? We answer these and many more questions in today's article. Read on to find out:

- Edge AI computing brings artificial intelligence to the edge: models run on or close to the device itself instead of relying on a distant cloud.

- The growing adoption of the Internet of Things (IoT), 5G, and AI-optimized processors contributes to the rapid evolution of edge AI computing.

- When evaluating edge AI computing solutions, businesses should consider three layers: edge hardware and devices, a dedicated software stack, and management and orchestration platforms.

- Edge AI computing can be implemented in 6 coherent steps: identify opportunities, prepare your data, define the target architecture, build a stable technology foundation, prototype and industrialize, and establish edge MLOps practices.

- Edge AI computing in healthcare has promising potential: instead of constantly sending raw patient data like medical imagery or vital signs to a remote cloud, the edge AI computing device itself performs the majority of the computation and sends only what is needed at that moment.

- Edge AI automotive intelligence efficiently processes sensitive data, minimizes exposure risks, and enables real-time threat detection.

- Our edge AI expertise covers Event / Incident Detection, Predictive Maintenance & Diagnostics, Infotainment / Personalization / Voice Assistants, V2X / Cooperative Perception / Shared Mapping, Road / Surface Condition Monitoring / Anomaly Alerts.

- Edge AI Computing Definition: What Does Edge AI Mean in Simple Terms?

- What Is the Secret Behind the Popularity of Edge AI Computing?

- Edge AI Computing Solutions Overview

- How to Implement Edge AI Computing: A Step-by-Step Guide

- Edge AI Computing Implementation Challenges & Best Practices

- Edge AI Computing Statistical Overview

- Edge AI Computing Across Various Industries

- Our Edge AI Computing Expertise

- The Future of Edge AI Computing: What Is Next?

Edge AI Computing Definition: What Does Edge AI Mean in Simple Terms?

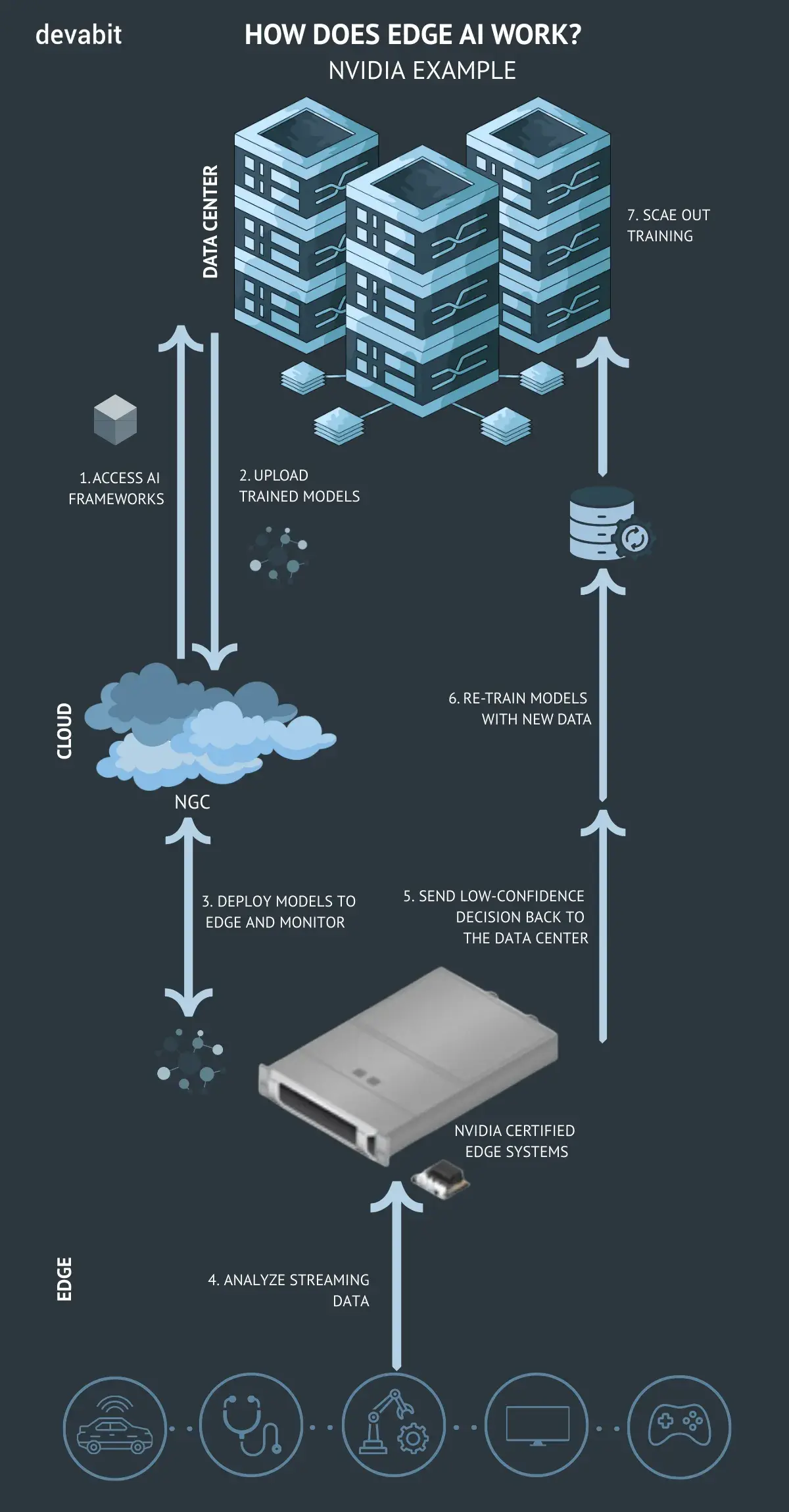

Unlike traditional AI, which relies mostly on cloud computing, edge AI computing refers to on-device execution: AI models and algorithms are deployed directly on edge devices like IoT sensors. The difference? Far less dependence on an internet connection and immediate data processing. Since data is processed close to where it is generated, AI can respond at the network edge within milliseconds. From autonomous cars and security cameras to smart homes and wearable devices, edge AI is nearly everywhere, acting as a silent yet powerful provider of real-time answers. In the business environment, edge AI computing enables companies to deploy intelligence directly where operations require it, at the exact moment of need. The ability to operate in real time with limited bandwidth makes edge AI computing essential for businesses that need to stay competitive.

What Is the Secret Behind the Popularity of Edge AI Computing?

We bet you have dreamed at some point of having a fully equipped smart home, with innovative sensors and ultra-sensitive cameras everywhere. Well, that is actually the answer. With the rapid evolution and growing popularity of the Internet of Things (IoT), widely used in smart homes, the need for interconnected edge AI devices has become imperative. At the same time, 5G, the latest generation of cellular networks, provides a stable foundation for much greater bandwidth and a higher density of connected devices. This, in turn, contributes to the growth of IoT and, accordingly, of edge AI computing. Finally, let us pay tribute to AI-optimized processors that supply greater computing power, which is crucial for the widespread adoption of edge AI computing systems.

Edge AI Computing Solutions Overview

Speaking of edge AI computing solutions, there are three solution layers most organizations typically consider. Below, we will take a look at each of them in detail.

01/ Hardware & Devices

When it comes to hardware and devices, there are several accessible options, including:

- low-power microcontrollers;

- single-board computers;

- ruggedized gateways;

- specialized AI accelerators.

Which one is better? Well, everything depends on performance per watt and per dollar. Unlike general-purpose processors, low-power microcontrollers are optimized for dedicated applications. These include controlling sensors, actuators, hardware components, etc. Even though low-power microcontrollers offer limited computational power, they consume less energy, making them a perfect option for Internet of Things (IoT) sensors or battery-powered devices.

Single-board computers, in turn, come in many variants, with the NVIDIA Jetson series, Google Coral Dev Board, and Raspberry Pi 5 among the most popular market offerings. Still, it is essential to note that the choice depends on the types of AI tasks your solution has to perform. By way of illustration, the ASUS Tinker Edge R is an excellent choice for industrial or advanced applications, while the UNIHIKER K10 can be a good entry-level solution for students and amateurs.

Ruggedized gateways serve as the smart "traffic controllers" of edge AI systems. They are powerful industrial computers built to operate in harsh environments and to run AI workloads efficiently while managing fleets of devices and sensors. Think of a ruggedized gateway as an "edge AI computing server in a hardened box" that bridges the gap between operational technology and IT.

Last but not least, specialized edge AI computing accelerators are hardware components that deliver low latency and efficient performance while reducing energy consumption. The most popular specialized AI accelerators include Graphics Processing Units (commonly applied in robotics and smart city technology), Vision Processing Units (a great option for interpreting AR/VR imagery), and Neural Processing Units (well suited to running neural network operations with high energy efficiency and speed).
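To make the "performance per watt and per dollar" comparison above more tangible, here is a minimal sketch of how such a shortlist could be scored. The `Candidate` class and every figure in it are hypothetical placeholders, not benchmarks of real products; in practice, throughput should be measured on your own model and workload.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hardware option described by illustrative, made-up figures."""
    name: str
    inferences_per_sec: float  # throughput measured on your own model
    watts: float               # average power draw under load
    unit_cost_usd: float       # price per device


def perf_per_watt(c: Candidate) -> float:
    """Throughput delivered for each watt consumed."""
    return c.inferences_per_sec / c.watts


def perf_per_dollar(c: Candidate) -> float:
    """Throughput delivered for each dollar of hardware cost."""
    return c.inferences_per_sec / c.unit_cost_usd


# Hypothetical placeholder values, for illustration only.
candidates = [
    Candidate("microcontroller", inferences_per_sec=5, watts=0.05, unit_cost_usd=10),
    Candidate("single-board computer", inferences_per_sec=60, watts=6, unit_cost_usd=120),
    Candidate("accelerator module", inferences_per_sec=400, watts=10, unit_cost_usd=500),
]

best_per_watt = max(candidates, key=perf_per_watt)
best_per_dollar = max(candidates, key=perf_per_dollar)
print("Best per watt:", best_per_watt.name)
print("Best per dollar:", best_per_dollar.name)
```

With these placeholder numbers, the two metrics point to different devices, which is exactly why the choice depends on whether energy or budget is the tighter constraint for a given deployment.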

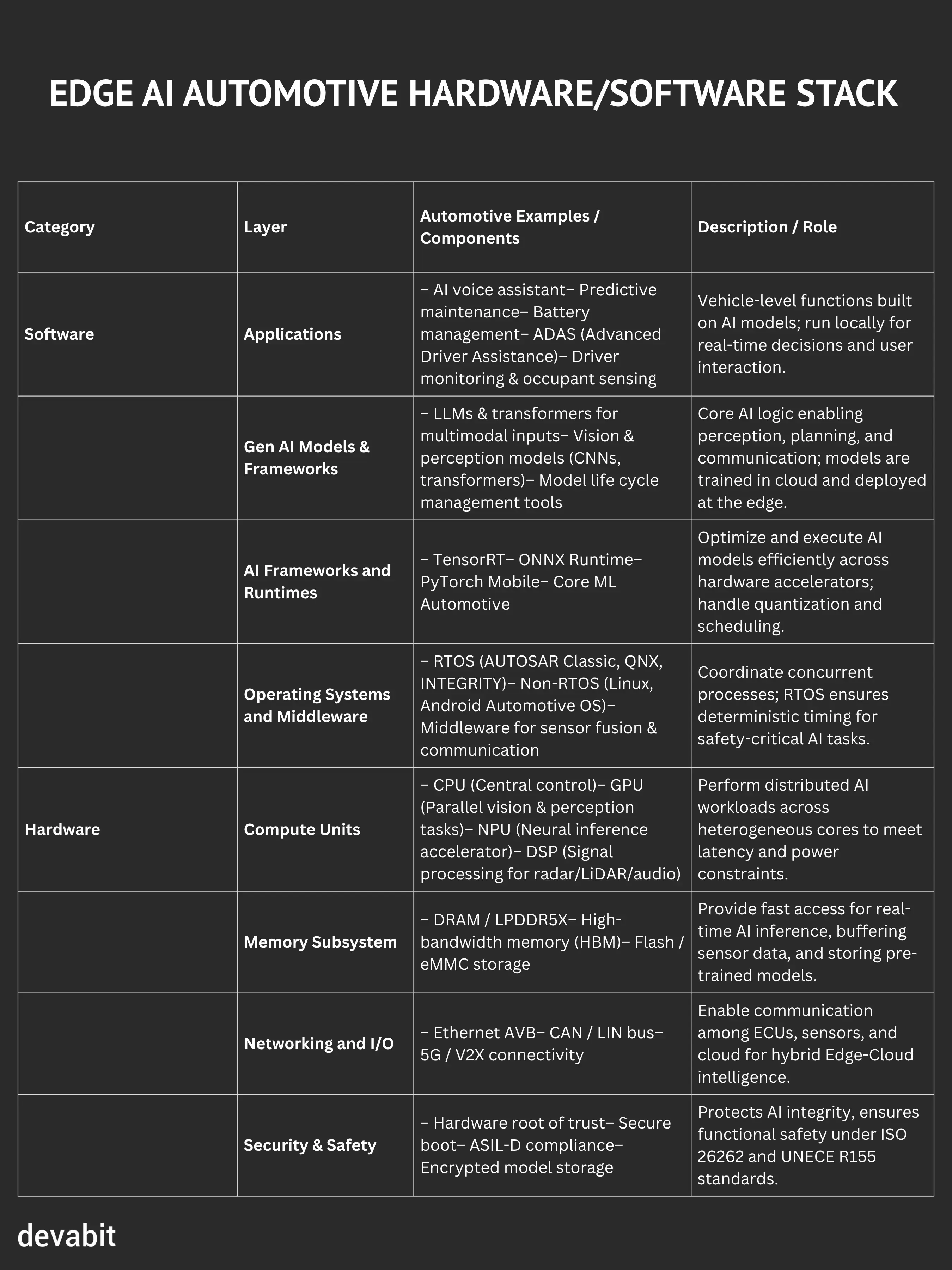

02/ Edge AI Software Stack

The software layer comprises operating systems and runtimes optimized for embedded environments, inference engines and AI frameworks adapted to edge deployments, security modules for encryption and identity management, and connectivity layers that ensure secure communication with the cloud and other devices. See the image below to find more info on each aspect.

03/ Management & Orchestration Platforms

Since scaling is an inseparable part of growth, it is important to ensure your solution not only efficiently runs a single model on a single device but is also capable of governing thousands of distributed nodes. To make this possible, mature edge AI computing platforms are equipped with:

- centralized configuration and device inventory;

- secure OTA updates;

- continuous health monitoring;

- remote diagnostics;

- logging and CI/CD systems.
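As an illustration of how device inventory, OTA updates, and health monitoring from the list above can work together, below is a minimal sketch of a staged (canary) rollout. It is a simplified assumption of how such a platform might behave, not the API of any particular product: `Device`, `staged_rollout`, and the `apply_update` callback are hypothetical names, and the callback stands in for whatever mechanism actually pushes the update and reports device health.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Device:
    device_id: str
    model_version: str


def staged_rollout(
    fleet: List[Device],
    new_version: str,
    apply_update: Callable[[Device, str], bool],  # hypothetical: True if healthy after update
    canary_fraction: float = 0.1,
    max_failure_rate: float = 0.05,
) -> bool:
    """Update a small canary batch first; continue only if it stays healthy.

    Returns True if the whole fleet was updated, False if the rollout
    was halted and the canary batch was rolled back.
    """
    if not fleet:
        return True

    canary_size = max(1, int(len(fleet) * canary_fraction))
    canary, rest = fleet[:canary_size], fleet[canary_size:]

    # Remember what each canary device was running, in case of rollback.
    previous = {d.device_id: d.model_version for d in canary}
    failures = 0
    for device in canary:
        healthy = apply_update(device, new_version)
        device.model_version = new_version
        if not healthy:
            failures += 1

    if failures / len(canary) > max_failure_rate:
        for device in canary:
            device.model_version = previous[device.device_id]
        return False

    for device in rest:
        apply_update(device, new_version)
        device.model_version = new_version
    return True


# Usage with a stub that always reports a healthy device.
fleet = [Device(f"cam-{i:03d}", "1.0.0") for i in range(20)]
completed = staged_rollout(fleet, "1.1.0", apply_update=lambda d, v: True)
print("Rollout completed:", completed)
```

Updating a small batch first limits the blast radius of a faulty release: if the canary devices report problems, the remaining nodes are never touched.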

How to Implement Edge AI Computing: A Step-by-Step Guide

In recent years, businesses have prioritized the implementation of edge AI computing as one of the most essential steps to keep pace with innovation. However, how can modern organizations efficiently implement edge AI computing while minimizing risks and accelerating time to long-term value? To help you sort things out, we have prepared a quick roadmap for implementing edge AI computing in 2026 and beyond:

01 / Look for high-impact opportunities.

First and foremost, focus on the problems where latency, unreliable connectivity, privacy, or bandwidth limitations are real obstacles. With this in mind, it becomes easier to identify where the implementation of edge AI computing can significantly boost safety, efficiency, or revenue.
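A quick back-of-the-envelope calculation can help spot bandwidth-bound use cases. The sketch below compares streaming raw data to the cloud with sending only detected events from the edge. The function names and all input values (a 4 Mbps stream, 200 events of 50 kB per day) are hypothetical assumptions chosen for illustration.

```python
def daily_stream_upload_gb(bitrate_mbps: float, hours_per_day: float = 24.0) -> float:
    """Data volume in decimal gigabytes for a continuous stream."""
    seconds = hours_per_day * 3600
    megabits = bitrate_mbps * seconds
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def daily_event_upload_gb(events_per_day: int, kb_per_event: float) -> float:
    """Data volume in decimal gigabytes when only events are uploaded."""
    return events_per_day * kb_per_event / 1_000_000  # kilobytes -> gigabytes


# Hypothetical inputs: one camera streaming at 4 Mbps versus
# the same camera sending 200 event payloads of 50 kB each per day.
raw_gb = daily_stream_upload_gb(bitrate_mbps=4.0)
event_gb = daily_event_upload_gb(events_per_day=200, kb_per_event=50.0)

print(f"Raw stream:  {raw_gb:.1f} GB per day")
print(f"Events only: {event_gb:.2f} GB per day")
```

If the gap between the two figures is large and the connection is costly or unreliable, the use case is a strong candidate for edge processing.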

02 / Make sure your data is ready.

It is essential to note that the implementation of edge AI computing does not eliminate the need for robust data engineering. Hence, ensure relevant and high-quality data sources are in place.

03 / Prioritize the target architecture.

At this point, we recommend evaluating the potential interaction between edge AI computing devices, local gateways, and cloud services to ensure smooth integration with existing systems, proper lifecycle management, and robust safety measures.

04 / Build the tech foundation.

Bearing in mind crucial factors such as long-term availability and vendor lock-in, it is time to assess the performance, certification, and operational requirements of hardware, edge AI computing frameworks, and management platforms.

05 / Prototype, then industrialize.

Start with a pilot prototype to evaluate technical feasibility and business impact. If everything works as planned, proceed with a production-grade rollout that includes observability, automated testing, and clearly assigned operational responsibilities.

06 / Establish edge MLOps practices.

Finally, establishing edge MLOps practices is pivotal for monitoring model performance in the field, gathering feedback data for retraining and validation, and redeploying updated models safely.
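To illustrate the monitoring part of edge MLOps, here is a minimal sketch of an on-device check that flags a model for retraining when its average prediction confidence drops. This rolling-mean check is only a simple proxy for model drift, not a full statistical drift test, and the `ConfidenceMonitor` class, its thresholds, and the sample values are hypothetical. It relies on `statistics.fmean`, available in Python 3.8 and later.

```python
from collections import deque
from statistics import fmean


class ConfidenceMonitor:
    """Tracks recent prediction confidence and compares it to a baseline."""

    def __init__(self, baseline: float, window: int = 100, tolerance: float = 0.10):
        self.baseline = baseline      # mean confidence observed during validation
        self.tolerance = tolerance    # allowed drop before raising a flag
        self.scores = deque(maxlen=window)

    def record(self, confidence: float) -> None:
        """Store the confidence of the latest prediction."""
        self.scores.append(confidence)

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few early samples cannot trigger a flag.
        if len(self.scores) < self.scores.maxlen:
            return False
        return fmean(self.scores) < self.baseline - self.tolerance


# Usage: confidence degrades over time, so the flag is eventually raised.
monitor = ConfidenceMonitor(baseline=0.90, window=5)
for score in [0.91, 0.89, 0.90, 0.92, 0.90]:
    monitor.record(score)
flag_before = monitor.needs_retraining()

for score in [0.62, 0.60, 0.58, 0.61, 0.59]:
    monitor.record(score)
flag_after = monitor.needs_retraining()

print("Flag before degradation:", flag_before)
print("Flag after degradation:", flag_after)
```

A device could send only this flag, together with a sample of low-confidence inputs, back to the central platform as feedback data for retraining.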

Edge AI Computing Implementation Challenges & Best Practices

There are two sides to every coin, and edge AI computing is no exception. First, let us take a look at the challenges that may arise while implementing edge AI computing:

- resource-constrained devices;

- heterogeneous fleets;

- expanded security surfaces;

- the need for cross-functional skills (embedded engineering, IT, data science, and operations).

Speaking of the top-notch edge AI computing implementation practices, it is essential to mention the following:

- starting with a clear business case;

- standardizing platforms;

- building security into every layer;

- aligning edge AI computing initiatives with broader digital transformation and cloud strategies.

Edge AI Computing Statistical Overview

At devabit, we always follow the golden rule: numbers speak louder than words. In this regard, we have prepared a quick statistical overview to prove that edge AI computing truly makes a real-life impact:

- In contrast to traditional devices, edge AI computing devices cut energy consumption by up to 15-20%.

- According to Statista, the global revenue of edge AI computing is expected to reach $350 billion by 2027.

- According to Google Scholar search results, the number of papers dedicated to edge AI computing has risen from only 720 in 2015 to more than 42,700 in 2023.

- North America accounted for 38% of the global edge AI computing market revenue share in 2025.

- Meanwhile, it is projected that North America, Europe, and East Asia will generate nearly 90% of edge AI computing revenue by 2030.

- IBM states there are over 15 billion edge AI computing devices currently deployed in the field.

- The hardware segment leads the edge AI computing area, with a revenue share of more than 50% in 2024.

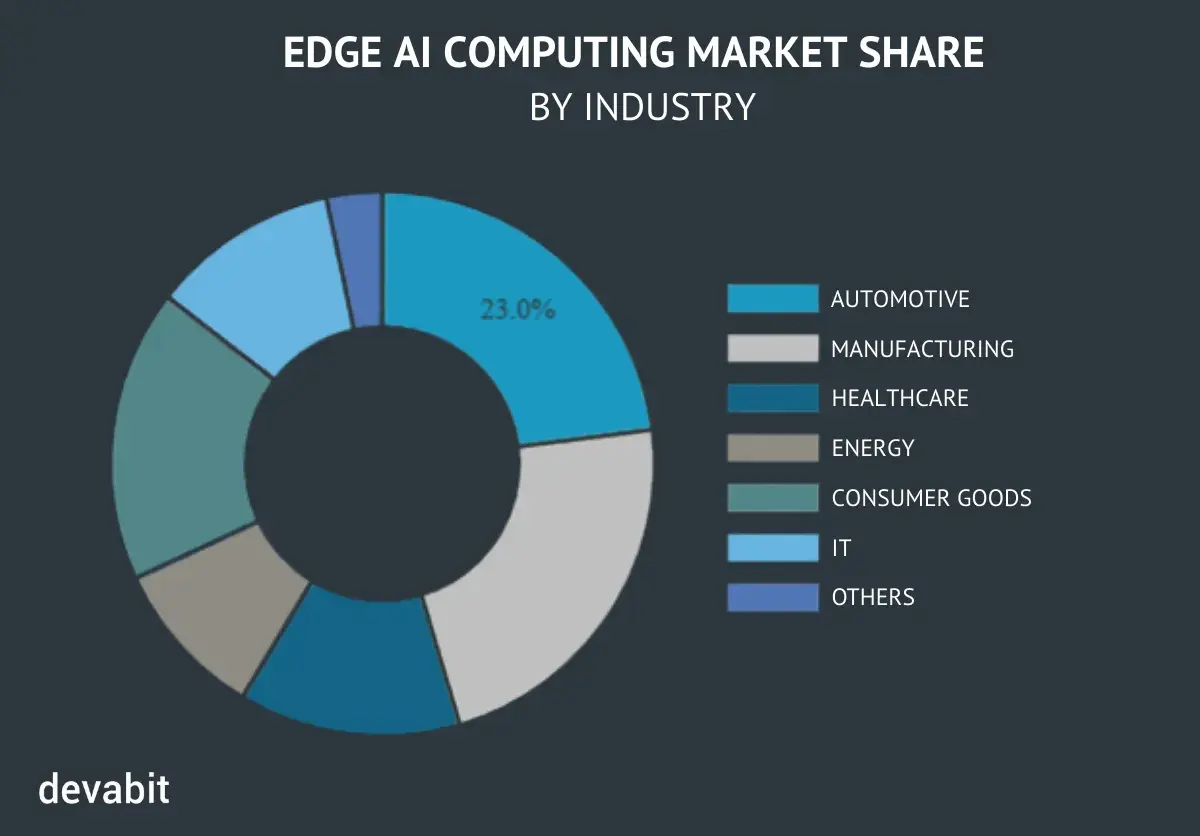

Edge AI Computing Across Various Industries

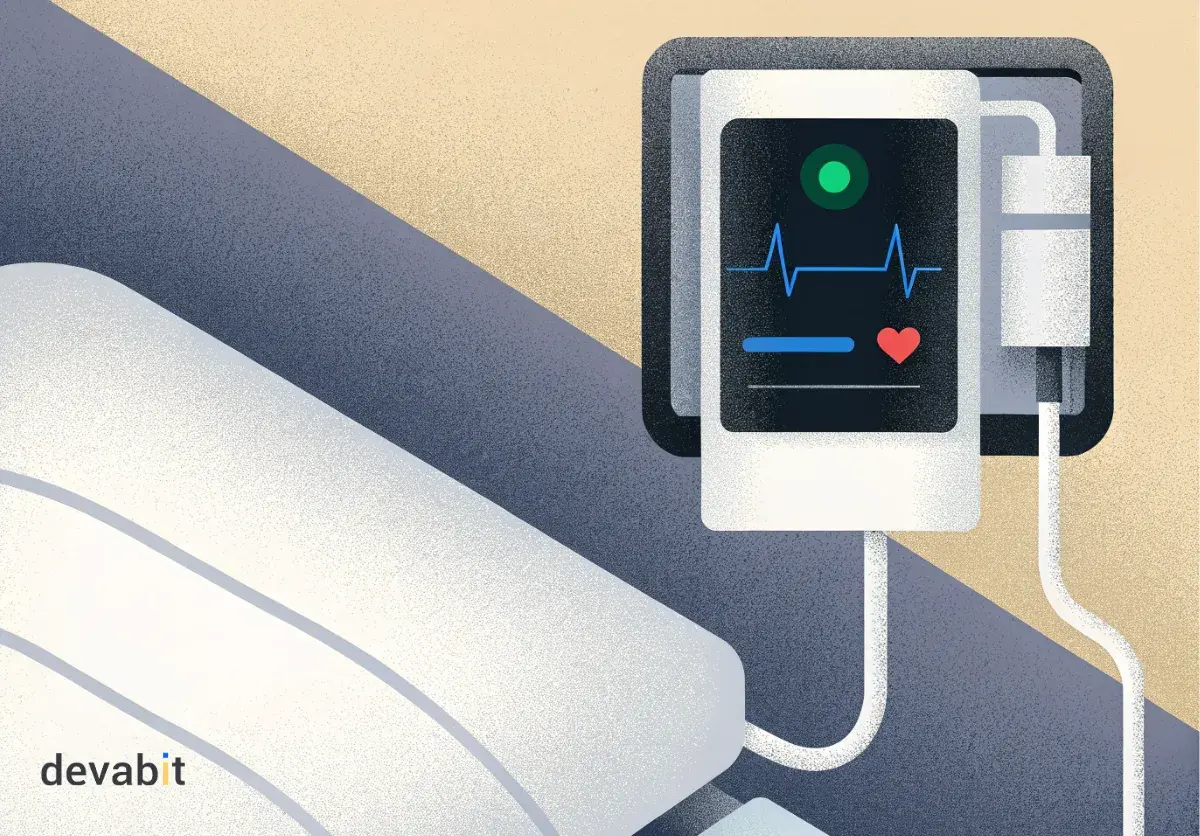

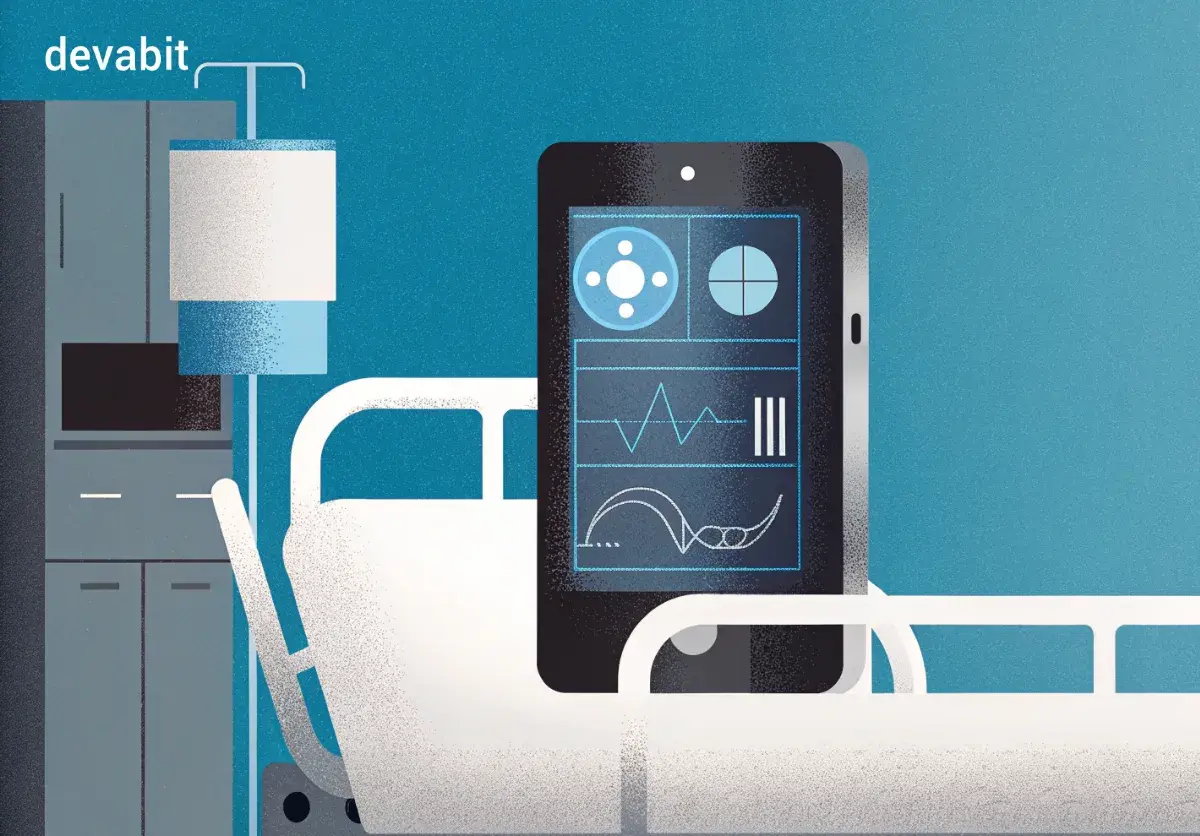

Edge AI Computing in Healthcare Industry

The use of artificial intelligence is certainly a controversial topic, especially in a domain as vulnerable as healthcare. Yet, researchers claim that edge AI computing in healthcare has promising potential, so let us take a look at what it can bring to the table for both patients and healthcare practitioners. From gathering vital patient information to generating insightful reports, modern IoT devices equipped with edge AI computing process data locally, reducing latency and enabling real-time decision assistance. Instead of constantly sending raw patient data like medical imagery or vital signs to a remote cloud, the edge AI computing device itself performs the majority of the computation and only sends what is needed currently (e.g., alerts, summaries, or de-identified data). Although edge AI computing empowers the healthcare industry by bringing computational power closer to patients and Internet of Things (IoT)-enabled medical devices, it is also associated with multiple data privacy and security constraints.

To sum up, edge AI computing can run efficiently on or near medical devices and healthcare infrastructure, such as:

- portable ultrasound machines;

- ICU patient monitors;

- hospital gateways;

- wearables;

- at-home diagnostic devices;

- AI-powered devices.
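The local-processing pattern described above, where raw readings stay on the device and only alerts or summaries are sent, can be sketched as follows. This is a simplified illustration under stated assumptions: `process_window` is a hypothetical function, and the heart-rate thresholds are placeholder values, not clinical guidance.

```python
from statistics import fmean
from typing import Dict, List


def process_window(heart_rates: List[int], low: int = 40, high: int = 130) -> Dict:
    """Reduce a window of raw readings to a small outbound payload.

    Raw samples never leave the device; only a summary and an alert flag do.
    The low/high thresholds are illustrative placeholders.
    """
    if not heart_rates:
        raise ValueError("heart_rates must contain at least one reading")

    out_of_range = [hr for hr in heart_rates if hr < low or hr > high]
    return {
        "samples": len(heart_rates),
        "mean_hr": round(fmean(heart_rates), 1),
        "min_hr": min(heart_rates),
        "max_hr": max(heart_rates),
        "alert": len(out_of_range) > 0,
    }


# Usage: five raw readings are reduced to one compact summary.
payload = process_window([72, 75, 71, 140, 74])
print(payload)
```

The payload contains no individual samples, so the amount of sensitive data leaving the device is reduced along with the bandwidth required.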

Edge AI Computing in Automotive Industry

Edge AI computing is an inseparable part of modern vehicles since it brings robust computing power directly into the car instead of relying on remote cloud infrastructure. Nowadays, we are witnessing a significant paradigm shift from mechanically driven machines to fully autonomous vehicles, and edge AI computing stands out as one of the most powerful drivers of this transformation. On top of that, edge AI computing brings automotive cybersecurity to an entirely new level: while vehicles generate terabytes of data daily, edge AI computing solutions not only process sensitive data efficiently but also minimize exposure risks and enable on-the-go threat detection.

The top 5 edge AI computing trends in the automotive industry are:

- ultra-low latency perception & decision-making;

- hybrid edge-cloud architectures & federated learning;

- personalized experiences & adaptive systems;

- in-sensor edge AI intelligence;

- vehicle-to-everything (V2X) & edge cooperation.

Our Edge AI Computing Expertise

As an expert in edge AI computing and automotive intelligence, the devabit team can assist you in developing cutting-edge solutions like:

- Event / Incident Detection

- Predictive Maintenance & Diagnostics

- Infotainment / Personalization / Voice Assistants

- V2X / Cooperative Perception / Shared Mapping

- Road / Surface Condition Monitoring / Anomaly Alerts

Did not find the right solution on the list? Contact our edge AI consultancy team, and we will come up with a tailor-made solution roadmap that addresses your business challenges.

The Future of Edge AI Computing: What Is Next?

At the end of the day, it is clear that edge AI computing is no longer an experimental innovation but a robust infrastructure that demonstrates meaningful impact. As edge AI computing models evolve at a rapid pace, hardware gains broader capabilities, resulting in the rise of autonomous industrial systems and vehicles, more advanced local analytics in healthcare, and smarter, more responsive social spaces. Despite all its mind-blowing features, edge AI computing does not replace cloud-based AI systems. Instead, edge AI computing will bridge the gap between local intelligence and centralized capabilities.

Organizations that invest in edge AI computing today, building a robust strategy across both layers, will soon gain a significant competitive advantage and get the most out of intelligence wherever and whenever it is needed. Want to join the cohort of those who lead the edge? Drop us a line to start your edge AI computing journey driven by experts in the field.
